Kandinsky 2.1 inherits best practices from DALL-E 2 and Latent Diffusion while introducing some new ideas.

Installation

bash

pip install diffusers transformers accelerate

Code example

python

from diffusers import DiffusionPipeline

import torch

pipe_prior = DiffusionPipeline.from_pretrained("kandinsky-community/kandinsky-2-1-prior", torch_dtype=torch.float16)

pipe_prior.to("cuda")

t2i_pipe = DiffusionPipeline.from_pretrained("kandinsky-community/kandinsky-2-1", torch_dtype=torch.float16)

t2i_pipe.to("cuda")

prompt = "A alien cheeseburger creature eating itself, claymation, cinematic, moody lighting"

negative_prompt = "low quality, bad quality"

generator = torch.Generator(device="cuda").manual_seed(12)

image_embeds, negative_image_embeds = pipe_prior(prompt, negative_prompt, guidance_scale=1.0, generator=generator).to_tuple()

image = t2i_pipe(prompt, negative_prompt=negative_prompt, image_embeds=image_embeds, negative_image_embeds=negative_image_embeds).images[0]

image.save("cheeseburger_monster.png")

To learn more about the Kandinsky pipelines, and more details about speed and memory optimizations, please have a look at the [docs](https://huggingface.co/docs/diffusers/main/en/api/pipelines/kandinsky).

Thanks ayushtues, for helping with the integration of Kandinsky 2.1!

UniDiffuser

UniDiffuser introduces a multimodal diffusion process that is capable of handling different generation tasks using a single unified approach:

* Unconditional image and text generation

* Joint image-text generation

* Text-to-image generation

* Image-to-text generation

* Image variation

* Text variation

Below is an example of how to use UniDiffuser for text-to-image generation:

python

import torch

from diffusers import UniDiffuserPipeline

model_id_or_path = "thu-ml/unidiffuser-v1"

pipe = UniDiffuserPipeline.from_pretrained(model_id_or_path, torch_dtype=torch.float16)

pipe.to("cuda")

This mode can be inferred from the input provided to the `pipe`.

pipe.set_text_to_image_mode()

prompt = "an elephant under the sea"

sample = pipe(prompt=prompt, num_inference_steps=20, guidance_scale=8.0).images[0]

sample.save("elephant.png")

Check out the UniDiffuser [docs](https://huggingface.co/docs/diffusers/main/en/api/pipelines/unidiffuser) to know more.

UniDiffuser was added by dg845 in [this PR](https://github.com/huggingface/diffusers/pull/2963).

LoRA

We're happy to support the A1111 formatted CivitAI LoRA checkpoints in a limited capacity.

First, download a checkpoint. We’ll use [this one](https://civitai.com/models/13239/light-and-shadow) for demonstration purposes.

bash

wget https://civitai.com/api/download/models/15603 -O light_and_shadow.safetensors

Next, we initialize a `DiffusionPipeline`:

python

import torch

from diffusers import StableDiffusionPipeline, DPMSolverMultistepScheduler

pipeline = StableDiffusionPipeline.from_pretrained(

"gsdf/Counterfeit-V2.5", torch_dtype=torch.float16, safety_checker=None

).to("cuda")

pipeline.scheduler = DPMSolverMultistepScheduler.from_config(

pipeline.scheduler.config, use_karras_sigmas=True

)

We then load the checkpoint downloaded from CivitAI:

python

pipeline.load_lora_weights(".", weight_name="light_and_shadow.safetensors")

(If you’re loading a checkpoint in the `safetensors` format, please ensure you have `safetensors` installed.)

And then it’s time for running inference:

python

prompt = "masterpiece, best quality, 1girl, at dusk"

negative_prompt = ("(low quality, worst quality:1.4), (bad anatomy), (inaccurate limb:1.2), "

"bad composition, inaccurate eyes, extra digit, fewer digits, (extra arms:1.2), large breasts")

images = pipeline(prompt=prompt,

negative_prompt=negative_prompt,

width=512,

height=768,

num_inference_steps=15,

num_images_per_prompt=4,

generator=torch.manual_seed(0)

).images

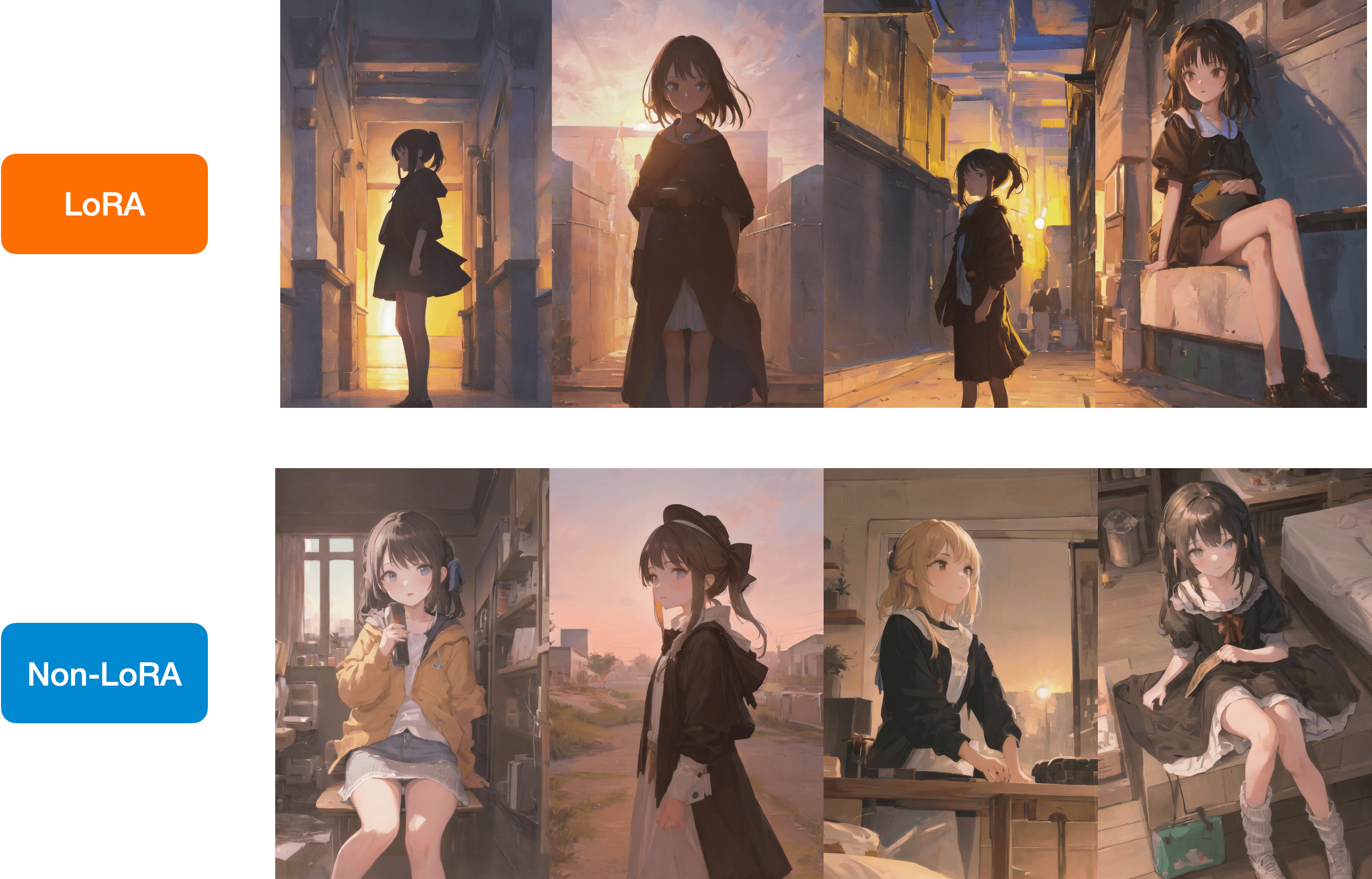

Below is a comparison between the LoRA and the non-LoRA results:

Check out [the docs](https://huggingface.co/docs/diffusers/main/en/training/lora#supporting-a1111-themed-lora-checkpoints-from-diffusers) to learn more.

Thanks to takuma104 for contributing this feature via [this PR](https://github.com/huggingface/diffusers/pull/3437).

Torch 2.0 Compile Speed-up

We introduced Torch 2.0 support for computing attention efficiently in 0.13.0. Since then, we have made a number of improvements to ensure the number of "graph breaks" in our models is reduced so that the models can be compiled with `torch.compile()`. As a result, we are happy to report massive improvements in the inference speed of our most popular pipelines. Check out [this doc](https://huggingface.co/docs/diffusers/main/en/optimization/torch2.0) to know more.

Thanks to Chillee for helping us with this. Thanks to patrickvonplaten for fixing the problems stemming from "graph breaks" in [this PR](https://github.com/huggingface/diffusers/pull/3286).

VAE pre-processing

We added a Vae Image processor class that provides a unified API for pipelines to prepare their image inputs, as well as post-processing their outputs. It supports resizing, normalization, and conversion between PIL Image, PyTorch, and Numpy arrays.

With that, all Stable diffusion pipelines now accept image inputs in the format of Pytorch Tensor and Numpy array, in addition to PIL Image, and can produce outputs in these 3 formats. It will also accept and return latents. This means you can now take generated latents from one pipeline and pass them to another as inputs, without leaving the latent space. If you work with multiple pipelines, you can pass Pytorch Tensor between them without converting to PIL Image.

To learn more about the API, check out our doc [here](https://huggingface.co/docs/diffusers/main/en/api/image_processor)

ControlNet Img2Img & Inpainting

ControlNet is one of the most used diffusion models and upon strong demand from the community we added controlnet img2img and controlnet inpaint pipelines.

This allows to use any controlnet checkpoint for both image-2-image setting as well as for inpaint.

:point_right: **Inpaint**: See controlnet inpaint model [here](https://huggingface.co/lllyasviel/control_v11p_sd15_inpaint)

:point_right: **Image-to-Image**: Any controlnet checkpoint can be used for image to image, e.g.:

py

from diffusers import StableDiffusionControlNetImg2ImgPipeline, ControlNetModel, UniPCMultistepScheduler

from diffusers.utils import load_image

import numpy as np

import torch

import cv2

from PIL import Image

download an image

image = load_image(

"https://hf.co/datasets/huggingface/documentation-images/resolve/main/diffusers/input_image_vermeer.png"

)

np_image = np.array(image)

get canny image

np_image = cv2.Canny(np_image, 100, 200)

np_image = np_image[:, :, None]

np_image = np.concatenate([np_image, np_image, np_image], axis=2)

canny_image = Image.fromarray(np_image)

load control net and stable diffusion v1-5

controlnet = ControlNetModel.from_pretrained("lllyasviel/sd-controlnet-canny", torch_dtype=torch.float16)

pipe = StableDiffusionControlNetImg2ImgPipeline.from_pretrained(

"runwayml/stable-diffusion-v1-5", controlnet=controlnet, torch_dtype=torch.float16

)

speed up diffusion process with faster scheduler and memory optimization

pipe.scheduler = UniPCMultistepScheduler.from_config(pipe.scheduler.config)

pipe.enable_model_cpu_offload()

generate image

generator = torch.manual_seed(0)

image = pipe(

"futuristic-looking woman",

num_inference_steps=20,

generator=generator,

image=image,

control_image=canny_image,

).images[0]

Diffedit Zero-Shot Inpainting Pipeline

This pipeline (introduced in [DiffEdit: Diffusion-based semantic image editing with mask guidance](https://arxiv.org/abs/2210.11427)) allows for image editing with natural language. Below is an end-to-end example.

First, let’s load our pipeline:

python

import torch

from diffusers import DDIMScheduler, DDIMInverseScheduler, StableDiffusionDiffEditPipeline

sd_model_ckpt = "stabilityai/stable-diffusion-2-1"

pipeline = StableDiffusionDiffEditPipeline.from_pretrained(

sd_model_ckpt,

torch_dtype=torch.float16,

safety_checker=None,

)

pipeline.scheduler = DDIMScheduler.from_config(pipeline.scheduler.config)

pipeline.inverse_scheduler = DDIMInverseScheduler.from_config(pipeline.scheduler.config)

pipeline.enable_model_cpu_offload()

pipeline.enable_vae_slicing()

generator = torch.manual_seed(0)

Then, we load an input image to edit using our method:

python

from diffusers.utils import load_image

img_url = "https://github.com/Xiang-cd/DiffEdit-stable-diffusion/raw/main/assets/origin.png"

raw_image = load_image(img_url).convert("RGB").resize((768, 768))

Then, we employ the source and target prompts to generate the editing mask:

python

source_prompt = "a bowl of fruits"

target_prompt = "a basket of fruits"

mask_image = pipeline.generate_mask(

image=raw_image,

source_prompt=source_prompt,

target_prompt=target_prompt,

generator=generator,

)

Then, we employ the caption and the input image to get the inverted latents:

python

inv_latents = pipeline.invert(prompt=source_prompt, image=raw_image, generator=generator).latents

Now, generate the image with the inverted latents and semantically generated mask:

python

image = pipeline(

prompt=target_prompt,

mask_image=mask_image,

image_latents=inv_latents,

generator=generator,

negative_prompt=source_prompt,

).images[0]

image.save("edited_image.png")

Check out the [docs](https://huggingface.co/docs/diffusers/main/en/api/pipelines/diffedit) to learn more about this pipeline.

Thanks to clarencechen for contributing this pipeline in [this PR](https://github.com/huggingface/diffusers/pull/2837).

Docs

* [Distributed inference with multiple GPUs](https://huggingface.co/docs/diffusers/main/en/training/distributed_inference) ([PR](https://github.com/huggingface/diffusers/issues/3010))

* [Attention processor](https://github.com/huggingface/diffusers/issues/3010) ([PR](https://github.com/huggingface/diffusers/pull/3474))

* [Load different Stable Diffusion formats](https://huggingface.co/docs/diffusers/main/en/using-diffusers/other-formats) ([PR](https://github.com/huggingface/diffusers/pull/3534))

Apart from these, we have made multiple improvements to the overall quality-of-life of our docs.

Thanks to stevhliu for leading the charge here.

Misc

* xformers attention processor fix when using LoRA ([PR](https://github.com/huggingface/diffusers/pull/3556) by takuma104)

* Pytorch 2.0 SDPA implementation of the LoRA attention processor ([PR](https://github.com/huggingface/diffusers/pull/3594))

All commits

* Post release for 0.16.0 by patrickvonplaten in 3244

* [docs] only mention one stage by pcuenca in 3246

* Write model card in controlnet training script by pcuenca in 3229

* [2064]: Add stochastic sampler (sample_dpmpp_sde) by nipunjindal in 3020

* [Stochastic Sampler][Slow Test]: Cuda test fixes by nipunjindal in 3257

* Remove required from tracker_project_name by pcuenca in 3260

* adding required parameters while calling the get_up_block and get_down_block by init-22 in 3210

* [docs] Update interface in repaint.mdx by ernestchu in 3119

* Update IF name to XL by apolinario in 3262

* fix typo in score sde pipeline by fecet in 3132

* Fix typo in textual inversion JAX training script by jairtrejo in 3123

* AudioDiffusionPipeline - fix encode method after config changes by teticio in 3114

* Revert "Revert "[Community Pipelines] Update lpw_stable_diffusion pipeline"" by patrickvonplaten in 3265

* Fix community pipelines by patrickvonplaten in 3266

* update notebook by yiyixuxu in 3259

* [docs] add notes for stateful model changes by williamberman in 3252

* [LoRA] quality of life improvements in the loading semantics and docs by sayakpaul in 3180

* [Community Pipelines] EDICT pipeline implementation by Joqsan in 3153

* [Docs]zh translated docs update by DrDavidS in 3245

* Update logging.mdx by standardAI in 2863

* Add multiple conditions to StableDiffusionControlNetInpaintPipeline by timegate in 3125

* Let's make sure that dreambooth always uploads to the Hub by patrickvonplaten in 3272

* Diffedit Zero-Shot Inpainting Pipeline by clarencechen in 2837

* add constant learning rate with custom rule by jason9075 in 3133

* Allow disabling torch 2_0 attention by patrickvonplaten in 3273

* [doc] add link to training script by yiyixuxu in 3271

* temp disable spectogram diffusion tests by williamberman in 3278

* Changed sample[0] to images[0] by IliaLarchenko in 3304

* Typo in tutorial by IliaLarchenko in 3295

* Torch compile graph fix by patrickvonplaten in 3286

* Postprocessing refactor img2img by yiyixuxu in 3268

* [Torch 2.0 compile] Fix more torch compile breaks by patrickvonplaten in 3313

* fix: scale_lr and sync example readme and docs. by sayakpaul in 3299

* Update stable_diffusion.mdx by mu94-csl in 3310

* Fix missing variable assign in DeepFloyd-IF-II by gitmylo in 3315

* Correct doc build for patch releases by patrickvonplaten in 3316

* Add Stable Diffusion RePaint to community pipelines by Markus-Pobitzer in 3320

* Fix multistep dpmsolver for cosine schedule (suitable for deepfloyd-if) by LuChengTHU in 3314

* [docs] Improve LoRA docs by stevhliu in 3311

* Added input pretubation by isamu-isozaki in 3292

* Update write_own_pipeline.mdx by csaybar in 3323

* update controlling generation doc with latest goodies. by sayakpaul in 3321

* [Quality] Make style by patrickvonplaten in 3341

* Fix config dpm by patrickvonplaten in 3343

* Add the SDE variant of DPM-Solver and DPM-Solver++ by LuChengTHU in 3344

* Add upsample_size to AttnUpBlock2D, AttnDownBlock2D by will-rice in 3275

* Rename --only_save_embeds to --save_as_full_pipeline by arrufat in 3206

* [AudioLDM] Generalise conversion script by sanchit-gandhi in 3328

* Fix TypeError when using prompt_embeds and negative_prompt by At-sushi in 2982

* Fix pipeline class on README by themrzmaster in 3345

* Inpainting: typo in docs by LysandreJik in 3331

* Add `use_Karras_sigmas` to LMSDiscreteScheduler by Isotr0py in 3351

* Batched load of textual inversions by pdoane in 3277

* [docs] Fix docstring by stevhliu in 3334

* if dreambooth lora by williamberman in 3360

* Postprocessing refactor all others by yiyixuxu in 3337

* [docs] Improve safetensors docstring by stevhliu in 3368

* add: a warning message when using xformers in a PT 2.0 env. by sayakpaul in 3365

* StableDiffusionInpaintingPipeline - resize image w.r.t height and width by rupertmenneer in 3322

* [docs] Adapt a model by stevhliu in 3326

* [docs] Load safetensors by stevhliu in 3333

* [Docs] Fix stable_diffusion.mdx typo by sudowind in 3398

* Support ControlNet v1.1 shuffle properly by takuma104 in 3340

* [Tests] better determinism by sayakpaul in 3374

* [docs] Add transformers to install by stevhliu in 3388

* [deepspeed] partial ZeRO-3 support by stas00 in 3076

* Add omegaconf for tests by patrickvonplaten in 3400

* Fix various bugs with LoRA Dreambooth and Dreambooth script by patrickvonplaten in 3353

* Fix docker file by patrickvonplaten in 3402

* fix: deepseepd_plugin retrieval from accelerate state by sayakpaul in 3410

* [Docs] Add `sigmoid` beta_scheduler to docstrings of relevant Schedulers by Laurent2916 in 3399

* Don't install accelerate and transformers from source by patrickvonplaten in 3415

* Don't install transformers and accelerate from source by patrickvonplaten in 3414

* Improve fast tests by patrickvonplaten in 3416

* attention refactor: the trilogy by williamberman in 3387

* [Docs] update the PT 2.0 optimization doc with latest findings by sayakpaul in 3370

* Fix style rendering by pcuenca in 3433

* unCLIP scheduler do not use note by williamberman in 3417

* Replace deprecated command with environment file by jongwooo in 3409

* fix warning message pipeline loading by patrickvonplaten in 3446

* add stable diffusion tensorrt img2img pipeline by asfiyab-nvidia in 3419

* Refactor controlnet and add img2img and inpaint by patrickvonplaten in 3386

* [Scheduler] DPM-Solver (++) Inverse Scheduler by clarencechen in 3335

* [Docs] Fix incomplete docstring for resnet.py by Laurent2916 in 3438

* fix tiled vae blend extent range by superlabs-dev in 3384

* Small update to "Next steps" section by pcuenca in 3443

* Allow arbitrary aspect ratio in IFSuperResolutionPipeline by devxpy in 3298

* Adding 'strength' parameter to StableDiffusionInpaintingPipeline by rupertmenneer in 3424

* [WIP] Bugfix - Pipeline.from_pretrained is broken when the pipeline is partially downloaded by vimarshc in 3448

* Fix gradient checkpointing bugs in freezing part of models (requires_grad=False) by 7eu7d7 in 3404

* Make dreambooth lora more robust to orig unet by patrickvonplaten in 3462

* Reduce peak VRAM by releasing large attention tensors (as soon as they're unnecessary) by cmdr2 in 3463

* Add min snr to text2img lora training script by wfng92 in 3459

* Add inpaint lora scale support by Glaceon-Hyy in 3460

* [From ckpt] Fix from_ckpt by patrickvonplaten in 3466

* Update full dreambooth script to work with IF by williamberman in 3425

* Add IF dreambooth docs by williamberman in 3470

* parameterize pass single args through tuple by williamberman in 3477

* attend and excite tests disable determinism on the class level by williamberman in 3478

* dreambooth docs torch.compile note by williamberman in 3471

* add: if entry in the dreambooth training docs. by sayakpaul in 3472

* [docs] Textual inversion inference by stevhliu in 3473

* [docs] Distributed inference by stevhliu in 3376

* [{Up,Down}sample1d] explicit view kernel size as number elements in flattened indices by williamberman in 3479

* mps & onnx tests rework by pcuenca in 3449

* [Attention processor] Better warning message when shifting to `AttnProcessor2_0` by sayakpaul in 3457

* [Docs] add note on local directory path. by sayakpaul in 3397

* Refactor full determinism by patrickvonplaten in 3485

* Fix DPM single by patrickvonplaten in 3413

* Add `use_Karras_sigmas` to DPMSolverSinglestepScheduler by Isotr0py in 3476

* Adds local_files_only bool to prevent forced online connection by w4ffl35 in 3486

* [Docs] Korean translation (optimization, training) by Snailpong in 3488

* DataLoader respecting EXIF data in Training Images by Ambrosiussen in 3465

* feat: allow disk offload for diffuser models by hari10599 in 3285

* [Community] reference only control by okotaku in 3435

* Support for cross-attention bias / mask by Birch-san in 2634

* do not scale the initial global step by gradient accumulation steps when loading from checkpoint by williamberman in 3506

* Fix bug in panorama pipeline when using dpmsolver scheduler by Isotr0py in 3499

* [Community Pipelines]Accelerate inference of stable diffusion by IPEX on CPU by yingjie-han in 3105

* [Community] ControlNet Reference by okotaku in 3508

* Allow custom pipeline loading by patrickvonplaten in 3504

* Make sure Diffusers works even if Hub is down by patrickvonplaten in 3447

* Improve README by patrickvonplaten in 3524

* Update README.md by patrickvonplaten in 3525

* Run `torch.compile` tests in separate subprocesses by pcuenca in 3503

* fix attention mask pad check by williamberman in 3531

* explicit broadcasts for assignments by williamberman in 3535

* [Examples/DreamBooth] refactor save_model_card utility in dreambooth examples by sayakpaul in 3543

* Fix panorama to support all schedulers by Isotr0py in 3546

* Add open parti prompts to docs by patrickvonplaten in 3549

* Add Kandinsky 2.1 by yiyixuxu ayushtues in 3308

* fix broken change for vq pipeline by yiyixuxu in 3563

* [Stable Diffusion Inpainting] Allow standard text-to-img checkpoints to be useable for SD inpainting by patrickvonplaten in 3533

* Fix loaded_token reference before definition by eminn in 3523

* renamed variable to input_ and output_ by vikasmech in 3507

* Correct inpainting controlnet docs by patrickvonplaten in 3572

* Fix controlnet guess mode euler by patrickvonplaten in 3571

* [docs] Add AttnProcessor to docs by stevhliu in 3474

* [WIP] Add UniDiffuser model and pipeline by dg845 in 2963

* Fix to apply LoRAXFormersAttnProcessor instead of LoRAAttnProcessor when xFormers is enabled by takuma104 in 3556

* fix dreambooth attention mask by linbo0518 in 3541

* [IF super res] correctly normalize PIL input by williamberman in 3536

* [docs] Maintenance by stevhliu in 3552

* [docs] update the broken links by brandonJY in 3568

* [docs] Working with different formats by stevhliu in 3534

* remove print statements from attention processor. by sayakpaul in 3592

* Fix temb attention by patrickvonplaten in 3607

* [docs] update the broken links by kadirnar in 3577

* [UniDiffuser Tests] Fix some tests by sayakpaul in 3609

* 3487 Fix inpainting strength for various samplers by rupertmenneer in 3532

* [Community] Support StableDiffusionTilingPipeline by kadirnar in 3586

* [Community, Enhancement] Add reference tricks in README by okotaku in 3589

* [Feat] Enable State Dict For Textual Inversion Loader by ghunkins in 3439

* [Community] CLIP Guided Images Mixing with Stable DIffusion Pipeline by TheDenk in 3587

* fix tests by patrickvonplaten in 3614

* Make sure we also change the config when setting `encoder_hid_dim_type=="text_proj"` and allow xformers by patrickvonplaten in 3615

* goodbye frog by williamberman in 3617

* update code to reflect latest changes as of May 30th by prathikr in 3616

* update dreambooth lora to work with IF stage II by williamberman in 3560

* Full Dreambooth IF stage II upscaling by williamberman in 3561

* [Docs] include the instruction-tuning blog link in the InstructPix2Pix docs by sayakpaul in 3644

* [Kandinsky] Improve kandinsky API a bit by patrickvonplaten in 3636

* Support Kohya-ss style LoRA file format (in a limited capacity) by takuma104 in 3437

* Iterate over unique tokens to avoid duplicate replacements for multivector embeddings by lachlan-nicholson in 3588

* fixed typo in example train_text_to_image.py by kashif in 3608

* fix inpainting pipeline when providing initial latents by yiyixuxu in 3641

* [Community Doc] Updated the filename and readme file. by kadirnar in 3634

* add Stable Diffusion TensorRT Inpainting pipeline by asfiyab-nvidia in 3642

* set config from original module but set compiled module on class by williamberman in 3650

* dreambooth if docs - stage II, more info by williamberman in 3628

* linting fix by williamberman in 3653

* Set step_rules correctly for piecewise_constant scheduler by 0x1355 in 3605

* Allow setting num_cycles for cosine_with_restarts lr scheduler by 0x1355 in 3606

* [docs] Load A1111 LoRA by stevhliu in 3629

* dreambooth upscaling fix added latents by williamberman in 3659

* Correct multi gpu dreambooth by patrickvonplaten in 3673

* Fix from_ckpt not working properly on windows by LyubimovVladislav in 3666

* Update Compel documentation for textual inversions by pdoane in 3663

* [UniDiffuser test] fix one test so that it runs correctly on V100 by sayakpaul in 3675

* [docs] More API fixes by stevhliu in 3640

* [WIP]Vae preprocessor refactor (PR1) by yiyixuxu in 3557

* small tweaks for parsing thibaudz controlnet checkpoints by williamberman in 3657

* move activation dispatches into helper function by williamberman in 3656

* [docs] Fix link to loader method by stevhliu in 3680

* Add function to remove monkey-patch for text encoder LoRA by takuma104 in 3649

* [LoRA] feat: add lora attention processor for pt 2.0. by sayakpaul in 3594

* refactor Image processor for x4 upscaler by yiyixuxu in 3692

* feat: when using PT 2.0 use LoRAAttnProcessor2_0 for text enc LoRA. by sayakpaul in 3691

* Fix the Kandinsky docstring examples by freespirit in 3695

* Support views batch for panorama by Isotr0py in 3632

* Fix from_ckpt for Stable Diffusion 2.x by ctrysbita in 3662

* Add draft for lora text encoder scale by patrickvonplaten in 3626

Significant community contributions

The following contributors have made significant changes to the library over the last release:

* nipunjindal

* [2064]: Add stochastic sampler (sample_dpmpp_sde) (3020)

* [Stochastic Sampler][Slow Test]: Cuda test fixes (3257)

* clarencechen

* Diffedit Zero-Shot Inpainting Pipeline (2837)

* [Scheduler] DPM-Solver (++) Inverse Scheduler (3335)

* Markus-Pobitzer

* Add Stable Diffusion RePaint to community pipelines (3320)

* takuma104

* Support ControlNet v1.1 shuffle properly (3340)

* Fix to apply LoRAXFormersAttnProcessor instead of LoRAAttnProcessor when xFormers is enabled (3556)

* Support Kohya-ss style LoRA file format (in a limited capacity) (3437)

* Add function to remove monkey-patch for text encoder LoRA (3649)

* asfiyab-nvidia

* add stable diffusion tensorrt img2img pipeline (3419)

* add Stable Diffusion TensorRT Inpainting pipeline (3642)

* Snailpong

* [Docs] Korean translation (optimization, training) (3488)

* okotaku

* [Community] reference only control (3435)

* [Community] ControlNet Reference (3508)

* [Community, Enhancement] Add reference tricks in README (3589)

* Birch-san

* Support for cross-attention bias / mask (2634)

* yingjie-han

* [Community Pipelines]Accelerate inference of stable diffusion by IPEX on CPU (3105)

* dg845

* [WIP] Add UniDiffuser model and pipeline (2963)

* kadirnar

* [docs] update the broken links (3577)

* [Community] Support StableDiffusionTilingPipeline (3586)

* [Community Doc] Updated the filename and readme file. (3634)

* TheDenk

* [Community] CLIP Guided Images Mixing with Stable DIffusion Pipeline (3587)

* prathikr

* update code to reflect latest changes as of May 30th (3616)