IOPaint Beta Release

<img width="1654" alt="Screenshot 2024-01-05 17 01 00" src="https://github.com/Sanster/lama-cleaner/assets/3998421/0e1c5db0-c996-4dfa-82f6-c8ed45a342d9">

It's been a while since the last release of **lama-cleaner** (now renamed to **IOPaint**). Part of the reason is that I released my first macOS application, [OptiClean](https://opticlean.io/), and started playing Baldur's Gate 3, which together took up a lot of my free time. The other reason is that the project's code had become increasingly complex, which made new features hard to add. I was hesitant to make changes, but in the end I decided to completely refactor both the front-end and back-end code, hoping this will help the project move forward.

The refactoring includes switching to [Vite](https://github.com/vitejs/vite), a new CSS framework ([tailwindcss](https://tailwindcss.com/)), a new state management library ([zustand](https://zustand-demo.pmnd.rs/)), a new UI library ([shadcn/ui](https://ui.shadcn.com/)), and more modern Python libraries such as [fastapi](https://github.com/tiangolo/fastapi) and [typer](https://github.com/tiangolo/typer). These refactors were painful and involved significant changes, but I think they were worth it: the project structure is clearer, new features are easier to add, and it is more accessible for others to contribute code.

Although I am not an AIGC artist or creator, I enjoy developing tools and I wanted to make this project more practical for inpainting and outpainting needs. Below are the new features/models that have been added with this beta release:

New CLI command: batch processing

After `pip install iopaint`, you can use the `iopaint` command in the command line. `iopaint start` is the command for starting the model service and web interface, while `iopaint run` is used for batch processing. You can use `iopaint start --help` or `iopaint run --help` to view the supported arguments.
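As a rough illustration, the sketch below assembles an `iopaint start` command line from Python and prints it without running it. Only the `--model` and `--model-dir` arguments are taken from these notes; the helper name `build_start_command` is made up for this example, and `iopaint start --help` remains the source of truth for what is supported.

```python
import shlex
from typing import List, Optional


def build_start_command(model: str, model_dir: Optional[str] = None) -> List[str]:
    """Assemble the argv list for `iopaint start` (hypothetical helper)."""
    cmd = ["iopaint", "start", "--model", model]
    if model_dir is not None:
        # --model-dir is only added when the caller overrides the default.
        cmd += ["--model-dir", model_dir]
    return cmd


# Print a copy-pasteable command line; nothing is executed here.
print(shlex.join(build_start_command("Lykon/dreamshaper-8-inpainting")))
```

To actually launch the service from a script, the returned list could be passed to `subprocess.run`.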

Better API doc

Thanks to fastapi, all backend interfaces now have clear API documentation. After starting the service with `iopaint start`, you can open `http://localhost:8080/docs` to view it.

<img width="1477" alt="image" src="https://github.com/Sanster/lama-cleaner/assets/3998421/4c531d7a-c189-4439-be5a-04b242a12e1f">
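FastAPI apps also publish a machine-readable OpenAPI schema, by default at `/openapi.json`. The sketch below lists the endpoints found in such a schema. It assumes IOPaint keeps FastAPI's default schema route and that the service listens on port 8080 as in the URL above; `fetch_schema` and `list_endpoints` are names invented for this example.

```python
import json
from typing import Dict, List
from urllib.request import urlopen

HTTP_METHODS = {"get", "post", "put", "patch", "delete", "head", "options"}


def list_endpoints(schema: Dict) -> List[str]:
    """Return sorted 'METHOD /path' strings from an OpenAPI schema dict."""
    endpoints = []
    for path, operations in schema.get("paths", {}).items():
        for method in operations:
            # Path items may also carry non-method keys such as "parameters".
            if method.lower() in HTTP_METHODS:
                endpoints.append(f"{method.upper()} {path}")
    return sorted(endpoints)


def fetch_schema(base_url: str = "http://localhost:8080") -> Dict:
    """Download the schema; assumes the default FastAPI route /openapi.json."""
    with urlopen(f"{base_url}/openapi.json", timeout=5) as resp:
        return json.load(resp)
```

With the service running, `list_endpoints(fetch_schema())` returns one line per backend route.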

Support for all sd1.5/sd2/sdxl normal or inpainting models from HuggingFace

Previously, lama-cleaner only supported the built-in `anything4` and `realisticVision1.4` SD inpaint models. `iopaint start --model` now supports automatically downloading models from HuggingFace, such as `Lykon/dreamshaper-8-inpainting`. You can [search](https://huggingface.co/models?sort=likes&search=inpaint) for available sd inpaint models on HuggingFace. Besides inpaint models, you can also specify regular sd models, such as `Lykon/dreamshaper-8`. Downloaded models are listed on the front-end page, where you can switch between them.

<img width="1654" alt="Screenshot 2024-01-05 17 01 21" src="https://github.com/Sanster/lama-cleaner/assets/3998421/548d67c9-6abc-430b-b24d-83bce8bce681">

Single-file `ckpt`/`safetensors` models are also supported. **IOPaint** searches for models under the `stable_diffusion` folder in the `--model-dir` directory (default: `~/.cache`).

<img width="636" alt="image" src="https://github.com/Sanster/lama-cleaner/assets/3998421/3ad14659-6029-421e-84bc-6962c02ce112">
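To check which single-file models would be visible, the folder can be listed with a few lines of Python. This is only a sketch of the lookup described above, not IOPaint's own scanning code; it assumes a flat (non-recursive) scan, and `find_single_file_models` is a hypothetical helper.

```python
from pathlib import Path
from typing import List

# File extensions named in the notes above.
MODEL_SUFFIXES = {".ckpt", ".safetensors"}


def find_single_file_models(model_dir: str = "~/.cache") -> List[Path]:
    """List checkpoint files directly under <model_dir>/stable_diffusion."""
    folder = Path(model_dir).expanduser() / "stable_diffusion"
    if not folder.is_dir():
        return []
    return sorted(
        p for p in folder.iterdir()
        if p.is_file() and p.suffix.lower() in MODEL_SUFFIXES
    )
```

An empty result usually means the folder does not exist yet or the files sit in a different directory than the one passed as `--model-dir`.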

Outpainting

You can use `Extender` to expand images in the x, y, or xy direction, either with a built-in expansion ratio or by adjusting the expansion area yourself.

<img width="336" alt="image" src="https://github.com/Sanster/lama-cleaner/assets/3998421/5f278a72-ccbe-4e05-b7eb-7ea9566a1669">

https://github.com/Sanster/lama-cleaner/assets/3998421/ae4e6560-03d3-4911-822a-c482de503f2a
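To get a feel for how a ratio and a direction translate into a canvas size, here is a small worked sketch. It assumes the ratio scales the full side length along the chosen axes; how `Extender` computes the area internally is not described in these notes, and `extended_size` is a name made up for this example.

```python
from typing import Tuple


def extended_size(width: int, height: int, ratio: float, direction: str) -> Tuple[int, int]:
    """Return the canvas size after scaling the chosen axes by `ratio`."""
    if ratio < 1:
        raise ValueError("ratio must be >= 1 to expand the image")
    if direction not in ("x", "y", "xy"):
        raise ValueError("direction must be 'x', 'y' or 'xy'")
    # Only the axes named in `direction` grow; the other keeps its size.
    new_w = round(width * ratio) if "x" in direction else width
    new_h = round(height * ratio) if "y" in direction else height
    return new_w, new_h
```

Under this assumption, a 512x512 image extended with ratio 1.5 in the x direction becomes 768x512, and the newly added region is what the model has to fill.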

Generate mask from segmentation model

`RemoveBG` and `Anime Segmentation` are two segmentation models. In previous versions, they could only be used to remove the background of an image. Now, the results from these two models can be used to generate masks for inpainting.

<img width="388" alt="image" src="https://github.com/Sanster/lama-cleaner/assets/3998421/2e624e93-2f22-46a2-85f0-48eb5a8b7058">
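Conceptually, turning a background-removal result into an inpainting mask is a thresholding step. The sketch below shows the idea on a plain nested list, assuming the segmentation output is an 8-bit alpha matte and that white (255) marks the area to inpaint; it is not IOPaint's actual code, and the threshold value is an arbitrary choice.

```python
from typing import List

Grid = List[List[int]]  # rows of 8-bit values


def mask_from_alpha(alpha: Grid, threshold: int = 127) -> Grid:
    """Mark foreground pixels (alpha above threshold) as 255, the rest as 0."""
    return [[255 if a > threshold else 0 for a in row] for row in alpha]
```

The resulting grid has the same shape as the input, so it can be used wherever a hand-drawn mask of that image would be.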

Expand or shrink mask

You can expand or shrink the mask produced by interactive segmentation or by the `RemoveBG`/`Anime Segmentation` models.

<img width="283" alt="image" src="https://github.com/Sanster/lama-cleaner/assets/3998421/b3602f99-2d6f-4363-ae73-f6ba1808ab44">
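Expanding and shrinking a mask are commonly implemented as morphological dilation and erosion. The pure-Python sketch below illustrates both on a small binary grid with a square neighbourhood; it is an illustration of the general technique, not necessarily how IOPaint implements the feature.

```python
from typing import List

Mask = List[List[int]]  # 1 = masked pixel, 0 = untouched pixel


def _morph(mask: Mask, radius: int, grow: bool) -> Mask:
    height, width = len(mask), len(mask[0])
    out = []
    for y in range(height):
        row = []
        for x in range(width):
            # Square neighbourhood around (x, y), clipped at the image border.
            window = [
                mask[ny][nx]
                for ny in range(max(0, y - radius), min(height, y + radius + 1))
                for nx in range(max(0, x - radius), min(width, x + radius + 1))
            ]
            # Dilation sets a pixel if ANY neighbour is set; erosion needs ALL.
            row.append(int(any(window)) if grow else int(all(window)))
        out.append(row)
    return out


def expand_mask(mask: Mask, radius: int = 1) -> Mask:
    """Grow the masked area by `radius` pixels (dilation)."""
    return _morph(mask, radius, grow=True)


def shrink_mask(mask: Mask, radius: int = 1) -> Mask:
    """Shrink the masked area by `radius` pixels (erosion)."""
    return _morph(mask, radius, grow=False)
```

Expanding a mask slightly is often useful when a segmentation mask hugs the object too tightly and leaves a visible outline after inpainting.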

More samplers

I have added more samplers based on this issue: [A1111 <> Diffusers Scheduler mapping #4167](https://github.com/huggingface/diffusers/issues/4167).

<img width="268" alt="image" src="https://github.com/Sanster/lama-cleaner/assets/3998421/9a7f9439-d6a3-4998-992a-1f02cda49913">
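For reference, the mapping discussed in that issue pairs A1111 sampler names with Diffusers scheduler classes and, in some cases, extra arguments. The table below is a partial sketch of it; which entries IOPaint exposes is not listed in these notes, and `resolve_scheduler` and `apply_sampler` are hypothetical helpers.

```python
from typing import Any, Dict, Tuple

# A1111 sampler name -> (Diffusers scheduler class name, extra init kwargs).
SAMPLER_TO_SCHEDULER: Dict[str, Tuple[str, Dict[str, Any]]] = {
    "DDIM": ("DDIMScheduler", {}),
    "Euler": ("EulerDiscreteScheduler", {}),
    "Euler a": ("EulerAncestralDiscreteScheduler", {}),
    "Heun": ("HeunDiscreteScheduler", {}),
    "LMS": ("LMSDiscreteScheduler", {}),
    "DPM++ 2M": ("DPMSolverMultistepScheduler", {}),
    "DPM++ 2M Karras": ("DPMSolverMultistepScheduler", {"use_karras_sigmas": True}),
    "UniPC": ("UniPCMultistepScheduler", {}),
}


def resolve_scheduler(sampler_name: str) -> Tuple[str, Dict[str, Any]]:
    """Look up the scheduler class name and kwargs for a sampler name."""
    try:
        return SAMPLER_TO_SCHEDULER[sampler_name]
    except KeyError:
        raise ValueError(f"unknown sampler: {sampler_name!r}") from None


def apply_sampler(pipe, sampler_name: str) -> None:
    """Swap the scheduler on an existing Diffusers pipeline."""
    import diffusers  # imported lazily so the table is usable without it

    class_name, kwargs = resolve_scheduler(sampler_name)
    scheduler_cls = getattr(diffusers, class_name)
    pipe.scheduler = scheduler_cls.from_config(pipe.scheduler.config, **kwargs)
```

Building the new scheduler from the existing scheduler's config keeps model-specific settings while changing only the sampling algorithm.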

LCM LoRA

https://huggingface.co/docs/diffusers/main/en/using-diffusers/inference_with_lcm_lora

Latent Consistency Models (LCM) enable quality image generation in typically 2-4 steps, making it possible to use diffusion models in almost real-time settings. The model will be downloaded on first use.

<img width="291" alt="image" src="https://github.com/Sanster/lama-cleaner/assets/3998421/5147b10d-8837-4957-afa4-b049e490eb63">
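For readers who want to try the same idea directly with Diffusers, the sketch below follows the pattern from the guide linked above: switch the scheduler to `LCMScheduler`, load the LCM-LoRA weights, and sample with very few steps. It assumes an SD1.5-based inpainting model and a CUDA device, and it is not a description of how IOPaint wires this up internally; the function names are invented for this example.

```python
DEFAULT_LCM_STEPS = 4  # LCM typically needs only 2-4 steps


def build_lcm_inpaint_pipeline(model_id: str = "Lykon/dreamshaper-8-inpainting"):
    """Load an inpainting pipeline and switch it to LCM-LoRA sampling."""
    # Imported lazily: torch and diffusers are heavy, optional dependencies.
    import torch
    from diffusers import AutoPipelineForInpainting, LCMScheduler

    pipe = AutoPipelineForInpainting.from_pretrained(model_id, torch_dtype=torch.float16)
    pipe.scheduler = LCMScheduler.from_config(pipe.scheduler.config)
    # LCM-LoRA weights for SD1.5-based models, as used in the Diffusers guide.
    pipe.load_lora_weights("latent-consistency/lcm-lora-sdv1-5")
    return pipe.to("cuda")


def lcm_inpaint(pipe, prompt: str, image, mask_image, steps: int = DEFAULT_LCM_STEPS):
    """Run one inpainting pass with a small number of steps."""
    result = pipe(prompt=prompt, image=image, mask_image=mask_image, num_inference_steps=steps)
    return result.images[0]
```

SDXL-based models need a different LCM-LoRA checkpoint, so the weights name above should not be reused for them.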

FreeU

https://huggingface.co/docs/diffusers/main/en/using-diffusers/freeu

FreeU is a technique for improving image quality. Different models may require different FreeU-specific hyperparameters. You can find out how to adjust these parameters in the original project: https://github.com/ChenyangSi/FreeU

<img width="282" alt="image" src="https://github.com/Sanster/lama-cleaner/assets/3998421/5a479a3b-9bc9-412a-90e4-63291dfe950d">
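In Diffusers, FreeU is switched on with `enable_freeu(s1, s2, b1, b2)` on the pipeline. The sketch below keeps one parameter set per model family. The numbers are the starting points suggested in the FreeU project and the Diffusers guide at the time of writing and should be treated as assumptions to verify and tune; `FREEU_PRESETS` and `apply_freeu` are names made up for this example.

```python
from typing import Dict

# Per-family starting values (assumed; check the FreeU README before relying on them).
FREEU_PRESETS: Dict[str, Dict[str, float]] = {
    "sd1.5": {"s1": 0.9, "s2": 0.2, "b1": 1.5, "b2": 1.6},
    "sd2.1": {"s1": 0.9, "s2": 0.2, "b1": 1.4, "b2": 1.6},
    "sdxl": {"s1": 0.9, "s2": 0.2, "b1": 1.3, "b2": 1.4},
}


def apply_freeu(pipe, family: str) -> None:
    """Enable FreeU on a Diffusers pipeline using the preset for `family`."""
    if family not in FREEU_PRESETS:
        raise ValueError(f"no FreeU preset for {family!r}")
    pipe.enable_freeu(**FREEU_PRESETS[family])
```

Calling `pipe.disable_freeu()` restores the default behaviour, which makes it easy to compare results with and without FreeU.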

New Models

- [MIGAN](https://github.com/Picsart-AI-Research/MI-GAN): Much smaller (27MB) and faster than other inpainting models (LaMa is 200MB), while still achieving good results.

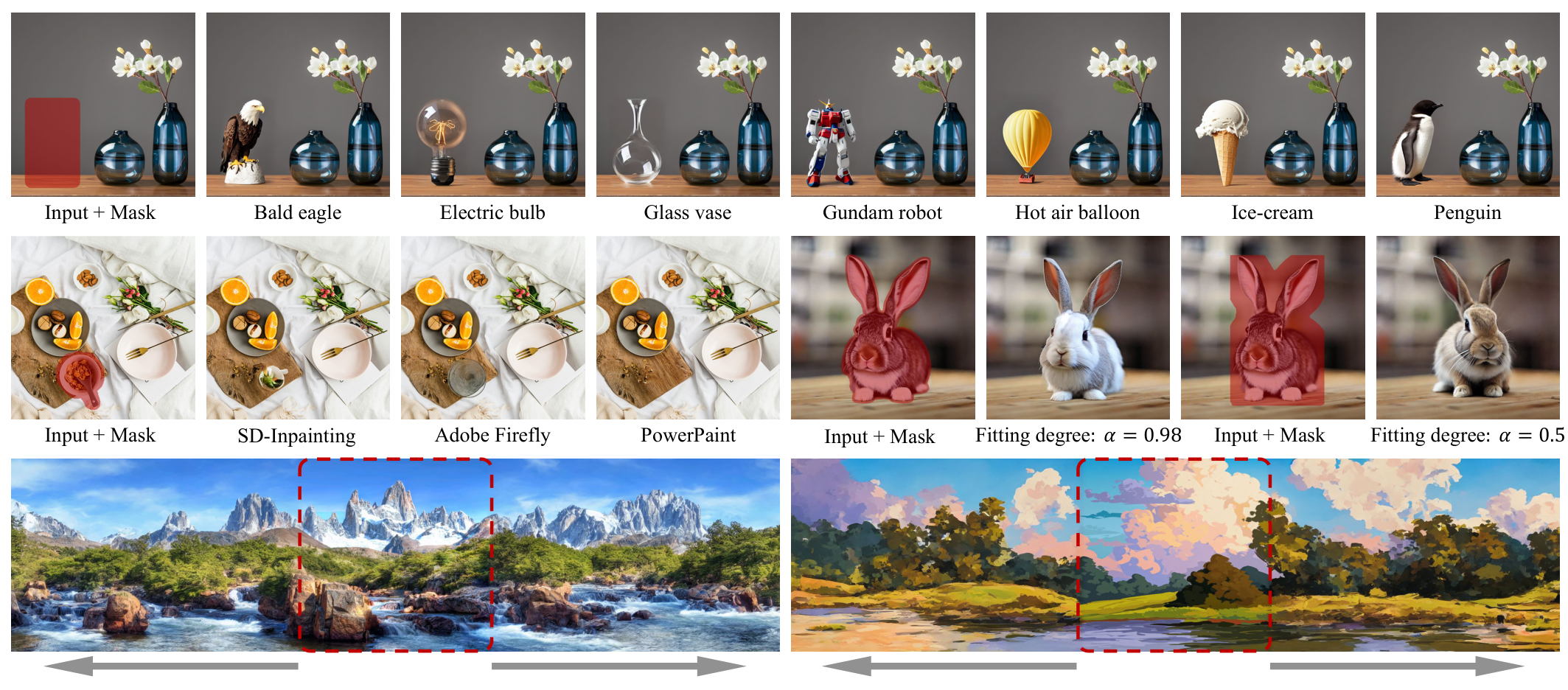

- [PowerPaint](https://github.com/zhuang2002/PowerPaint): `--model Sanster/PowerPaint-V1-stable-diffusion-inpainting` PowerPaint is a stable diffusion model optimized for inpainting/outpainting/remove_object tasks. We can control the model's results by specifying the **task**.

- [Kandinsky 2.2 inpaint model](https://huggingface.co/kandinsky-community/kandinsky-2-2-decoder-inpaint): `--model kandinsky-community/kandinsky-2-2-decoder-inpaint`

- [SDXL inpaint model](https://huggingface.co/diffusers/stable-diffusion-xl-1.0-inpainting-0.1): `--model diffusers/stable-diffusion-xl-1.0-inpainting-0.1`

- [MobileSAM](https://github.com/ChaoningZhang/MobileSAM?tab=readme-ov-file): `--interactive-seg-model mobile_sam` A lightweight SAM model that is faster and requires fewer resources. There are currently many other variations of the SAM model; feel free to submit a PR!

Other improvements

- If FileManager is enabled, you can use `left` or `right` to switch images.

- Fixed the icc_profile loss issue (the color of the image changed globally after inpainting).

- Fixed the exif rotate issue.

Windows installer users

For 1-click Windows installer users: first of all, thank you for your support. The current version of IOPaint is a beta, and I will update the installation package after the official release of IOPaint.

Maybe a cup of coffee

During development, lots of coffee was consumed. If you find my project useful, please consider buying me a cup of coffee. Thank you! ❤️ https://ko-fi.com/Z8Z1CZJGY