This is the release of TorchServe v0.8.0.

New Features

1. **Supported [large model inference](https://github.com/pytorch/serve/blob/614bfc0a382d809d5fd59d0dbc130c57e67c3332/docs/large_model_inference.md?plain=1#L1) in a distributed environment 2193 2320 2209 2215 2310 2218 lxning HamidShojanazeri**

TorchServe added deep integration to support large model inference. It provides a PyTorch-native large model inference solution by integrating [PiPPy](https://github.com/pytorch/tau/tree/main/pippy). It also provides the flexibility and extensibility to support other popular libraries such as Microsoft DeepSpeed and Hugging Face Accelerate.

2. **Supported streaming response for gRPC 2186 and HTTP 2233 lxning**

To improve UX in generative AI inference, TorchServe can send intermediate token responses to the client by supporting [gRPC server-side streaming](https://github.com/pytorch/serve/blob/614bfc0a382d809d5fd59d0dbc130c57e67c3332/docs/grpc_api.md?plain=1#L74) and [HTTP 1.1 chunked encoding](https://github.com/pytorch/serve/blob/614bfc0a382d809d5fd59d0dbc130c57e67c3332/docs/inference_api.md?plain=1#L103).
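The idea behind streaming can be sketched on the producer side: every token except the last goes out as an intermediate response, and the last one is returned as the final response that closes the stream. This is a minimal sketch, not TorchServe's API; `send_chunk` is a hypothetical callback standing in for whatever mechanism the backend uses to emit an intermediate response (see the linked gRPC and HTTP docs for the real interface).

```python
from typing import Callable, Iterable, List


def stream_tokens(tokens: Iterable[str], send_chunk: Callable[[str], None]) -> str:
    """Emit every token except the last as an intermediate response.

    `send_chunk` is a hypothetical callback; the last token is returned so
    the caller can send it as the final response, which ends the stream.
    """
    pending = None
    for token in tokens:
        if pending is not None:
            send_chunk(pending)  # intermediate chunk: the client sees it immediately
        pending = token
    return pending if pending is not None else ""


# Usage: collect what a client would receive, in arrival order.
received: List[str] = []
final = stream_tokens(["Hello", ",", " world"], received.append)
received.append(final)
print("".join(received))  # Hello, world
```

Holding back one token lets the producer know which chunk is the last without needing a separate end-of-stream marker.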

3. **Supported PyTorch/XLA on GPU and TPU 2182 morgandu**

By leveraging `torch.compile`, it's now possible to run TorchServe using XLA, which is optimized for both GPU and TPU deployments.

4. **Implemented [New Metrics platform](https://github.com/pytorch/serve/issues/1492) #2199 2190 2165 namannandan lxning**

TorchServe fully supports [metrics](https://github.com/pytorch/serve/blob/master/docs/metrics.md#introduction) in Prometheus mode or Log mode. Both frontend and backend metrics can be configured in a [central metrics YAML file](https://github.com/pytorch/serve/blob/master/docs/metrics.md#central-metrics-yaml-file-definition).

5. **Supported map-based model config YAML file 2193 lxning**

Added a [config-file](https://github.com/pytorch/serve/blob/2f1f52f553e83703b5c380c2570a36708ee5cafa/model-archiver/README.md?plain=1#L119) option for the model config to the model archiver tool. Users can flexibly define customized parameters in this YAML file and easily access them in the backend handler via the variable [context.model_yaml_config](https://github.com/pytorch/serve/blob/2f1f52f553e83703b5c380c2570a36708ee5cafa/docs/configuration.md?plain=1#L267). This new feature also made it easy for TorchServe to support the other new features and enhancements.
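A minimal sketch of a handler reading custom parameters, assuming `context.model_yaml_config` is the parsed model config YAML as a Python dict. The `handler` section and its `max_length`/`prefix` keys are hypothetical custom parameters, and `SimpleNamespace` stands in for the real context object so the example runs without TorchServe.

```python
from types import SimpleNamespace


class EchoConfigHandler:
    """Toy handler showing how custom YAML parameters might be consumed."""

    def initialize(self, context):
        # Assumed: model_yaml_config is the parsed YAML (a dict), or None.
        cfg = context.model_yaml_config or {}
        handler_cfg = cfg.get("handler", {})  # hypothetical custom section
        self.max_length = int(handler_cfg.get("max_length", 32))
        self.prefix = handler_cfg.get("prefix", "")

    def handle(self, data, context):
        # Truncate each input and prepend the configured prefix.
        return [self.prefix + str(item)[: self.max_length] for item in data]


# Stand-in context with the YAML already parsed into a dict.
ctx = SimpleNamespace(
    model_yaml_config={
        "minWorkers": 1,
        "maxWorkers": 1,
        "handler": {"max_length": 5, "prefix": ">> "},
    }
)
h = EchoConfigHandler()
h.initialize(ctx)
print(h.handle(["abcdefgh"], ctx))  # ['>> abcde']
```

Falling back to defaults keeps the handler usable when the archive ships without a config file.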

6. **Refactored PT2.0 support 2222 msaroufim**

We've refactored our model optimization utilities and improved logging to help debug compilation issues. We've also deprecated `compile.json` in favor of the new YAML config format; follow our [guide](https://github.com/pytorch/serve/blob/master/examples/pt2/README.md) to learn more. The main difference is that, while archiving a model, instead of passing in `compile.json` via `--extra-files`, you pass in `--config-file model_config.yaml`.
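As a sketch, the change in the archiving command can be expressed by building both argument lists. The model name, weight file, and YAML file name are placeholders; `--extra-files` and `--config-file` come from the text above, and the other flags are standard `torch-model-archiver` options. The function only builds the command; pass the list to `subprocess.run` to actually create the archive.

```python
from typing import List, Optional


def archiver_args(model_name: str, weights: str, handler: str,
                  config_file: Optional[str] = None) -> List[str]:
    """Build a torch-model-archiver command line (placeholder file names)."""
    args = [
        "torch-model-archiver",
        "--model-name", model_name,
        "--version", "1.0",
        "--serialized-file", weights,
        "--handler", handler,
    ]
    if config_file is not None:
        # New style: compile settings live in the model config YAML.
        args += ["--config-file", config_file]
    return args


# Deprecated style: compile.json shipped as an extra file.
old_style = archiver_args("resnet18", "resnet18.pt", "image_classifier") + [
    "--extra-files", "compile.json",
]
# New style: the same settings provided through the model config YAML.
new_style = archiver_args("resnet18", "resnet18.pt", "image_classifier",
                          "model_config.yaml")
print(" ".join(new_style))
```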

7. **Supported user-specified GPU deviceIds for a model 2193 lxning**

By default, TorchServe uses a round-robin algorithm to assign GPUs to workers on a host. Starting from v0.8.0, TorchServe allows users to define [deviceIds](https://github.com/pytorch/serve/blob/614bfc0a382d809d5fd59d0dbc130c57e67c3332/model-archiver/README.md?plain=1#L175) in [model_config.yaml](https://github.com/pytorch/serve/blob/614bfc0a382d809d5fd59d0dbc130c57e67c3332/model-archiver/README.md?plain=1#L162) to assign specific GPUs to a model.
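A simplified model of the assignment, assuming workers of one model are numbered from 0 and GPUs are handed out round-robin over all GPUs on the host or, when `deviceIds` is set, only over the listed IDs. This illustrates the idea; it is not TorchServe's scheduler, which tracks assignment across the whole host.

```python
from typing import List, Optional


def assign_gpus(num_workers: int, num_gpus: int,
                device_ids: Optional[List[int]] = None) -> List[int]:
    """Return the GPU id for each worker, cycling through the allowed GPUs."""
    allowed = device_ids if device_ids else list(range(num_gpus))
    if not allowed:
        raise ValueError("no GPUs available for this model")
    return [allowed[w % len(allowed)] for w in range(num_workers)]


print(assign_gpus(4, num_gpus=4))                     # [0, 1, 2, 3]
print(assign_gpus(4, num_gpus=4, device_ids=[2, 3]))  # [2, 3, 2, 3]
```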

8. **Supported CPU models on a GPU host 2193 lxning**

TorchServe supports hybrid mode on a GPU host. Users can define [deviceType](https://github.com/pytorch/serve/blob/614bfc0a382d809d5fd59d0dbc130c57e67c3332/model-archiver/README.md?plain=1#L174) in the model config YAML file to deploy a model on the CPU of a GPU host.
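A sketch of how a handler could honor this setting when picking its device, assuming the config's `deviceType` value (`"cpu"` or `"gpu"`) and the worker's assigned GPU id are both available to it. The `select_device` function and its parameters are hypothetical; it returns torch-style device strings but does not require torch.

```python
from typing import Optional


def select_device(device_type: Optional[str], gpu_id: Optional[int],
                  cuda_available: bool) -> str:
    """Pick a torch-style device string for one worker (hypothetical helper)."""
    if device_type == "cpu":
        return "cpu"  # pinned to CPU even though the host has GPUs
    if cuda_available and gpu_id is not None:
        return f"cuda:{gpu_id}"
    return "cpu"  # no usable GPU: fall back to CPU


print(select_device("cpu", 0, cuda_available=True))  # cpu
print(select_device("gpu", 1, cuda_available=True))  # cuda:1
```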

9. **Supported Client Timeout 2267 lxning**

TorchServe allows users to define [clientTimeoutInMills](https://github.com/pytorch/serve/blob/614bfc0a382d809d5fd59d0dbc130c57e67c3332/frontend/archive/src/main/java/org/pytorch/serve/archive/model/ModelConfig.java#L40) in a model config YAML file. If it is set, TorchServe calculates the expiration timestamp of each incoming inference request and drops the request once it has expired.
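A minimal sketch of that logic, assuming the timeout is measured from the moment a request arrives and checked before the request is dispatched to a worker. The `Request` class and `drop_expired` helper are hypothetical illustrations, not TorchServe's frontend code.

```python
import time
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Request:
    payload: str
    client_timeout_ms: Optional[int] = None  # mirrors clientTimeoutInMills
    arrival: float = field(default_factory=time.monotonic)

    @property
    def expires_at(self) -> Optional[float]:
        # The expiration timestamp is only defined when a timeout is set.
        if self.client_timeout_ms is None:
            return None
        return self.arrival + self.client_timeout_ms / 1000.0


def drop_expired(queue: List[Request], now: float) -> List[Request]:
    """Keep requests that have no timeout or have not expired yet."""
    return [r for r in queue if r.expires_at is None or now < r.expires_at]


q = [
    Request("a", 100, arrival=0.0),   # expires at 0.1 s
    Request("b", 5000, arrival=0.0),  # expires at 5.0 s
    Request("c", arrival=0.0),        # no timeout
]
print([r.payload for r in drop_expired(q, now=1.0)])  # ['b', 'c']
```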

10. **Updated ping endpoint default behavior 2254 lxning**

Supported [maxRetryTimeoutInSec](https://github.com/pytorch/serve/blob/2f1f52f553e83703b5c380c2570a36708ee5cafa/frontend/archive/src/main/java/org/pytorch/serve/archive/model/ModelConfig.java#L35) in the model config YAML file. It defines the maximum time window for recovering a dead backend worker of a model. The default value is 5 minutes, and users can adjust it in the model config YAML file. The [ping endpoint](https://github.com/pytorch/serve/blob/2f1f52f553e83703b5c380c2570a36708ee5cafa/docs/inference_api.md?plain=1#L26) returns 200 if all models have enough healthy workers (i.e., greater than or equal to minWorkers); otherwise, it returns 500.
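The ping rule can be sketched as a single check over all registered models. The input shape (a dict per model holding `minWorkers` and a hypothetical `healthy_workers` count) is an assumption for illustration, not TorchServe's internal data model.

```python
from typing import Dict


def ping_status(models: Dict[str, Dict[str, int]]) -> int:
    """Return 200 if every model has at least minWorkers healthy workers."""
    for stats in models.values():
        if stats["healthy_workers"] < stats["minWorkers"]:
            return 500  # one under-provisioned model fails the whole ping
    return 200


print(ping_status({"a": {"minWorkers": 2, "healthy_workers": 2}}))  # 200
print(ping_status({"a": {"minWorkers": 2, "healthy_workers": 1}}))  # 500
```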

New Examples

+ **[Example of PiPPy](https://github.com/pytorch/serve/tree/master/examples/large_models/Huggingface_pippy) onboarding Open platform framework for distributed model inference #2215 HamidShojanazeri**

+ **[Example of DeepSpeed](https://github.com/pytorch/serve/tree/master/examples/large_models/deepspeed/opt) onboarding Open platform framework for distributed model inference #2218 lxning**

+ **[Example of Stable diffusion v2](https://github.com/pytorch/serve/tree/master/examples/diffusers) #2009 jagadeeshi2i**

Improvements

+ Upgraded to PyTorch 2.0 2194 agunapal

+ Enabled core pinning in CPU nightly benchmark 2166 2237 min-jean-cho

TorchServe can be used with [Intel® Extension for PyTorch*](https://github.com/intel/intel-extension-for-pytorch) to give a performance boost on Intel hardware. Intel® Extension for PyTorch* is a Python package that extends PyTorch with up-to-date feature optimizations that take advantage of AVX-512 Vector Neural Network Instructions (AVX512 VNNI), Intel® Advanced Matrix Extensions (Intel® AMX), and more.

Enabling core pinning in the TorchServe CPU nightly benchmark shows a significant performance speedup. This feature is implemented via a script under the PyTorch Xeon backend, initiated from Intel® Extension for PyTorch*. To try out core pinning on your workload, add `cpu_launcher_enable=true` in `config.properties`.

To try out more optimizations with Intel® Extension for PyTorch*, install Intel® Extension for PyTorch* and add `ipex_enable=true` in `config.properties`.
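If you already have a `config.properties`, the two settings above can be merged into it with a small script. This is a sketch under simplifying assumptions: it treats every non-comment line as `key=value` (Java properties files also allow `:` separators and continuation lines, which it ignores), and the existing `inference_address` line is just sample content. Intel® Extension for PyTorch* still has to be installed separately for `ipex_enable` to take effect; start TorchServe with `torchserve --start --ts-config config.properties` to pick up the file.

```python
import tempfile
from pathlib import Path
from typing import Dict


def set_properties(path: Path, options: Dict[str, str]) -> None:
    """Set key=value pairs in a properties file, keeping all other lines."""
    lines = path.read_text().splitlines() if path.exists() else []
    remaining = dict(options)
    out = []
    for line in lines:
        key = line.split("=", 1)[0].strip()
        if key in remaining:
            out.append(f"{key}={remaining.pop(key)}")  # overwrite existing value
        else:
            out.append(line)
    out.extend(f"{k}={v}" for k, v in remaining.items())  # append new keys
    path.write_text("\n".join(out) + "\n")


with tempfile.TemporaryDirectory() as d:
    cfg = Path(d) / "config.properties"
    cfg.write_text("inference_address=http://127.0.0.1:8080\ncpu_launcher_enable=false\n")
    set_properties(cfg, {"cpu_launcher_enable": "true", "ipex_enable": "true"})
    result = cfg.read_text()

print(result)
```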

+ Added Neuron nightly benchmark dashboard 2171 2167 namannandan

+ Enabled torch.compile support for torch 2.0.0 pre-release 2256 morgandu

+ Fixed torch.compile mac regression test 2250 msaroufim

+ Added configuration option to disable system metrics 2104 namannandan

+ Added regression test cases for SageMaker MME contract 2200 agunapal

In case of OOM, TorchServe now returns error code 507 instead of the generic code 503.

+ Fixed error thrown in KServe while loading multiple models 2235 jagadeeshi2i

+ Added Docker CI for TorchServe 2226 fabridamicelli

+ Changed Docker image release from dev to production 2227 agunapal

+ Supported building Docker images with a specified Python version 2154 agunapal

+ Model archiver optimizations:

a). Added wildcard file search in model archiver `--extra-files` 2142 gustavhartz

b). Added zip-store option to model archiver tool 2196 mreso

c). Made model archiver tests runnable from any directory 2191 mreso

d). Supported tgz format model decompression in TorchServe frontend 2214 lxning
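Two of the archiver ideas above can be sketched with the standard library alone: expanding comma-separated `--extra-files` entries that may contain wildcards with `glob`, and a "store" mode that writes the zip with `zipfile.ZIP_STORED` (no compression). This is an illustration, not the model archiver's actual code; flattening files to their basenames inside the archive is a simplification that would collide on duplicate names.

```python
import glob
import os
import tempfile
import zipfile
from typing import List


def expand_extra_files(patterns: str) -> List[str]:
    """Expand a comma-separated list of paths/wildcards into sorted file paths."""
    files = set()
    for pattern in patterns.split(","):
        matches = glob.glob(pattern.strip(), recursive=True)
        files.update(m for m in matches if os.path.isfile(m))
    return sorted(files)


def write_archive(dest: str, files: List[str], store_only: bool) -> None:
    """Write files into a zip; ZIP_STORED skips compression entirely."""
    method = zipfile.ZIP_STORED if store_only else zipfile.ZIP_DEFLATED
    with zipfile.ZipFile(dest, "w", compression=method) as zf:
        for path in files:
            zf.write(path, arcname=os.path.basename(path))


with tempfile.TemporaryDirectory() as d:
    for name in ("a.json", "b.json", "notes.txt"):
        with open(os.path.join(d, name), "w") as f:
            f.write("{}")
    picked = expand_extra_files(os.path.join(d, "*.json"))
    out = os.path.join(d, "model.zip")
    write_archive(out, picked, store_only=True)
    with zipfile.ZipFile(out) as zf:
        names = zf.namelist()
        methods = {info.compress_type for info in zf.infolist()}

print(names)  # ['a.json', 'b.json']
```

Storing without compression trades archive size for faster packing and unpacking, which matters most for large weight files that compress poorly anyway.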

+ Enabled batch processing in example scripted tokenizer 2130 mreso

+ Made handler tests callable with pytest 2173 mreso

+ Refactored sanity tests 2219 mreso

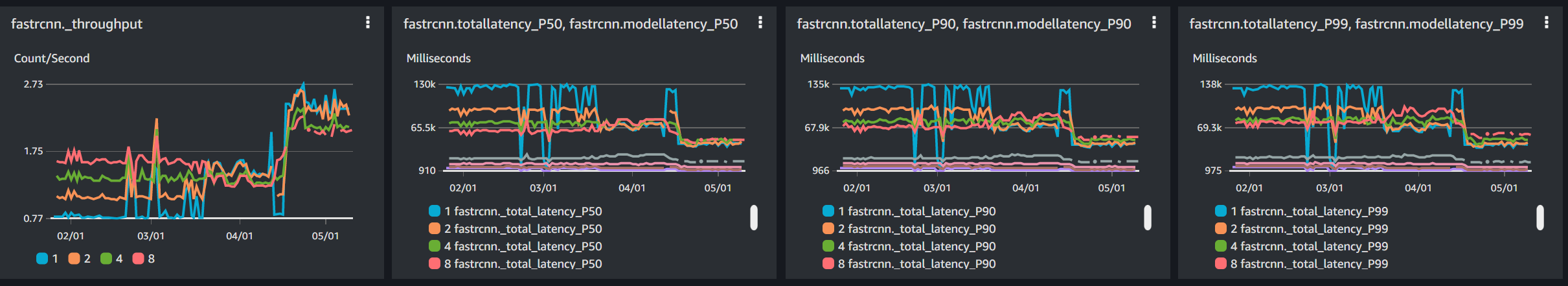

+ Improved benchmark tool 2228 and added auto-validation 2144 2157 agunapal

Automatically flags deviations of metrics from the average of the last 30 runs.
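The auto-validation rule can be sketched as comparing a new measurement with the mean of the most recent 30 runs. The 10% relative tolerance is a hypothetical threshold chosen for illustration; the benchmark's actual threshold is not stated here.

```python
from statistics import mean
from typing import Sequence


def flag_deviation(history: Sequence[float], current: float,
                   window: int = 30, tolerance: float = 0.1) -> bool:
    """Return True if `current` deviates from the mean of the last `window`
    runs by more than `tolerance`, expressed as a fraction of that mean."""
    recent = list(history)[-window:]
    if not recent:
        return False  # nothing to compare against yet
    baseline = mean(recent)
    if baseline == 0:
        return current != 0
    return abs(current - baseline) / abs(baseline) > tolerance


history = [100.0] * 30
print(flag_deviation(history, 105.0))  # False (5% off the average)
print(flag_deviation(history, 85.0))   # True (15% off the average)
```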

+ Added notifications for CI job (benchmark, regression test) failures agunapal

+ Updated CI to run on Ubuntu 20.04 2153 agunapal

+ Added GitHub code scanning `codeql.yml` 2149 msaroufim

+ Froze pynvml version to avoid a crash in nvgpu 2138 mreso

+ Made pre-commit usage clearer in error message 2241 and upgraded isort version 2132 msaroufim

Dependency Upgrades

Documentation

+ NVIDIA MPS integration study 2205 mreso

This study compares TPS between TorchServe with NVIDIA MPS enabled and TorchServe without NVIDIA MPS enabled on P3 and G4 instances. It can help you decide whether to enable MPS for your deployment.

+ Updated TorchServe page on pytorch.org 2243 agunapal

+ Lint: fixed broken Windows Conda link 2240 msaroufim

+ Corrected example PT2 doc 2244 samils7

+ Fixed regex error in Configuration.md 2172 mpoemsl

+ Fixed dead Kubectl links 2160 msaroufim

+ Updated model file docs in example doc 2148 tmc

+ Example for serving TorchServe using Docker 2118 agunapal

+ Updated Walmart blog link 2117 agunapal

Platform Support

Ubuntu 16.04, Ubuntu 18.04, Ubuntu 20.04, macOS 10.14+, Windows 10 Pro, Windows Server 2019, Windows Subsystem for Linux (Windows Server 2019, WSLv1, Ubuntu 18.04). TorchServe now requires Python 3.8 and above, and JDK 17.

GPU Support