🍱 We are excited to announce the release of BentoML `v1.2`, the biggest release since the launch of `v1.0`. It incorporates the lessons and feedback we have gathered from our community over the past year. For a comprehensive overview of the new features and the motivations behind them, see our release [blog post](https://www.bentoml.com/blog/introducing-bentoml-1-2).

Here are a few key points to note before we delve into the new features:

- `v1.2` is fully backward compatible: Bentos built with `v1.1` will continue to work seamlessly with this release.

- We remain committed to supporting `v1.1`. Critical bug fixes and security updates will be backported to the `v1.1` branch.

- [BentoML documentation](https://docs.bentoml.com/en/latest/index.html) has been updated with examples and guides for `v1.2`. More guides are being added every week.

- BentoCloud is fully equipped to handle deployments from both `v1.1` and `v1.2` releases of BentoML.

⛏️ Introduced a [simplified service SDK](https://docs.bentoml.com/en/latest/guides/services.html) to empower developers with greater control and flexibility.

- Simplified services and APIs so they are defined as plain Python classes and methods, making it easy to add custom logic and use third-party libraries.

- Introduced `bentoml.service` and `bentoml.api` decorators to customize the behaviors of services and APIs.

- Moved service configuration from YAML files into the `bentoml.service` decorator, right next to the class definition.

- See this [example](https://github.com/bentoml/BentoVLLM), which demonstrates the flexibility of the service API by initializing a vLLM AsyncEngine in the service constructor and running inference with continuous batching in the service API.

🔭 Revamped IO descriptors with more [familiar input and output types](https://docs.bentoml.com/en/latest/guides/iotypes.html).

- Enabled the direct use of standard Python types, without the need for additional IO descriptor definitions or decorations.

- Integrated with Pydantic to leverage its robust validation capabilities and wide array of supported types.

- Expanded support for ML- and generative AI-specific IO types.

📦 Updated the [model saving and loading API](https://docs.bentoml.com/en/latest/guides/model-store.html) to be more generic, enabling integration with more ML frameworks.

- Models can now be saved and loaded flexibly with the `bentoml.models.create` API instead of framework-specific APIs such as `bentoml.pytorch.save_model` and `bentoml.tensorflow.save_model`.

🚚 Streamlined the [deployment workflow](https://docs.bentoml.com/en/latest/guides/deployment.html) to allow more rapid development iterations and a faster time to production.

- Enabled deploying Git projects directly to production through the CLI and the Python API.

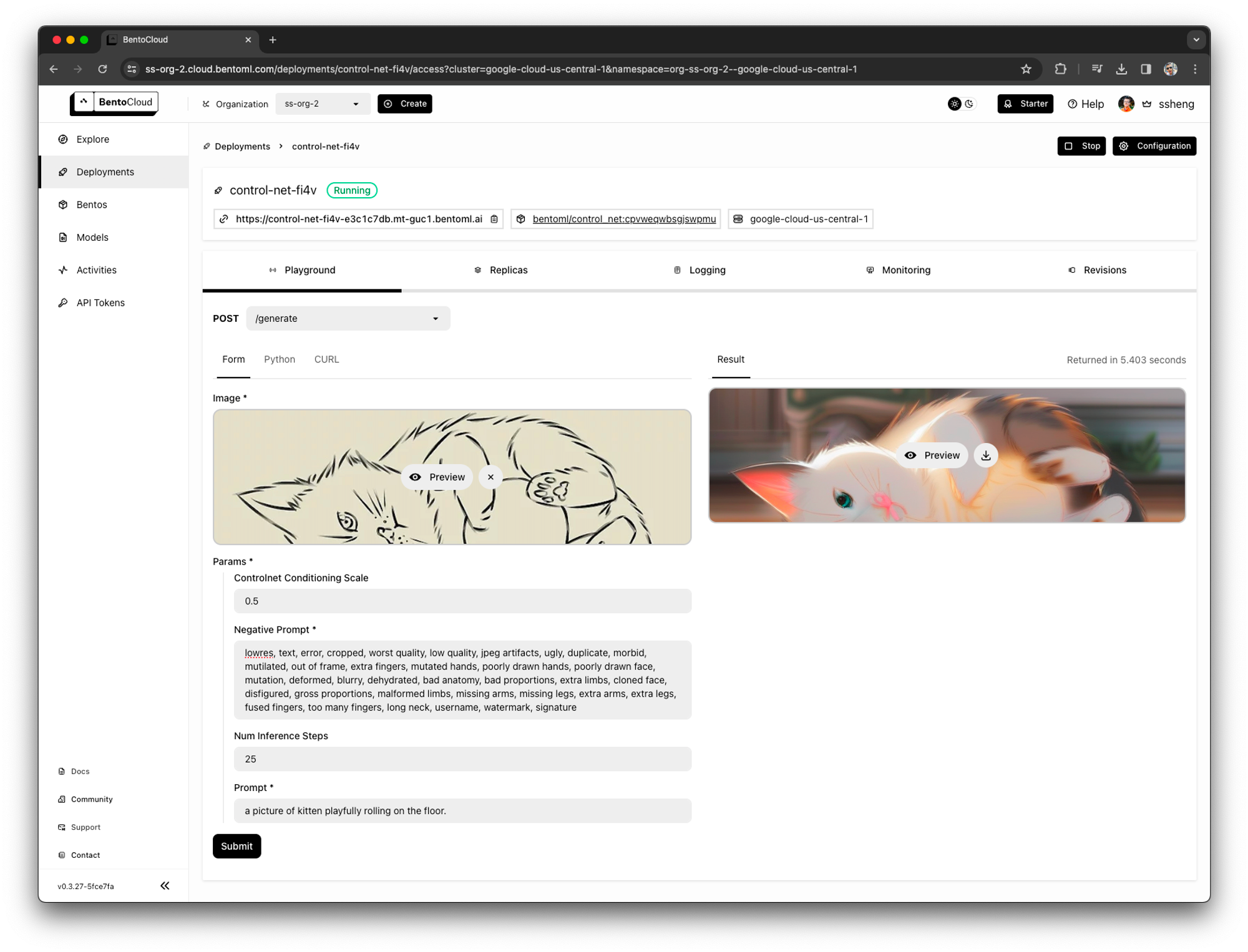

🎨 Improved API development experience with generated web UI and rich [Python client](https://docs.bentoml.com/en/latest/guides/clients.html).

- Every Bento now comes with an automatically generated UI in the BentoCloud Playground, tailored to its API definitions.

- BentoClient offers a Pythonic way to invoke service endpoints: parameters are supplied as native Python objects, and the client handles serialization efficiently while ensuring compatibility and performance.

🎭 We've learned that the best way to showcase what BentoML can do is not through dry, conceptual documentation but through real-world examples. Check out our current list of examples, and we'll continue to publish new ones to the [gallery](https://bentoml.com/gallery) as exciting new models are released.

- [BentoVLLM](https://github.com/bentoml/BentoVLLM)

- [BentoControlNet](https://github.com/bentoml/BentoControlNet)

- [BentoSDXLTurbo](https://github.com/bentoml/BentoSDXLTurbo)

- [BentoWhisperX](https://github.com/bentoml/BentoWhisperX)

- [BentoXTTS](https://github.com/bentoml/BentoXTTS)

- [BentoCLIP](https://github.com/bentoml/BentoCLIP)

🙏 Thank you for your continued support!

What's Changed

* chore(deps): bump h2 from 0.3.20 to 0.3.24 in /grpc-client/rust by dependabot in https://github.com/bentoml/BentoML/pull/4434

* fix: Remove trailing character when building bento with API on Windows by holzweber in https://github.com/bentoml/BentoML/pull/4455

* fix: Replace backslashes with forward slashes, making `bentoml pull` possible on Windows by holzweber in https://github.com/bentoml/BentoML/pull/4456

* fix(monitoring): Missing f string by jianshen92 in https://github.com/bentoml/BentoML/pull/4463

* feat: 1.2 staging by bojiang in https://github.com/bentoml/BentoML/pull/4366

* chore(deps): bump pdm-project/setup-pdm from 3 to 4 by dependabot in https://github.com/bentoml/BentoML/pull/4457

* fix(client): Convert string to Path if it isn't like a URL by frostming in https://github.com/bentoml/BentoML/pull/4469

* docs: Add Model Store doc by Sherlock113 in https://github.com/bentoml/BentoML/pull/4471

* docs: Clean up BentoCloud doc and add get started doc by Sherlock113 in https://github.com/bentoml/BentoML/pull/4472

* docs: Update the quickstart by Sherlock113 in https://github.com/bentoml/BentoML/pull/4474

New Contributors

* holzweber made their first contribution in https://github.com/bentoml/BentoML/pull/4455

**Full Changelog**: https://github.com/bentoml/BentoML/compare/v1.2.0rc1...v1.2.0