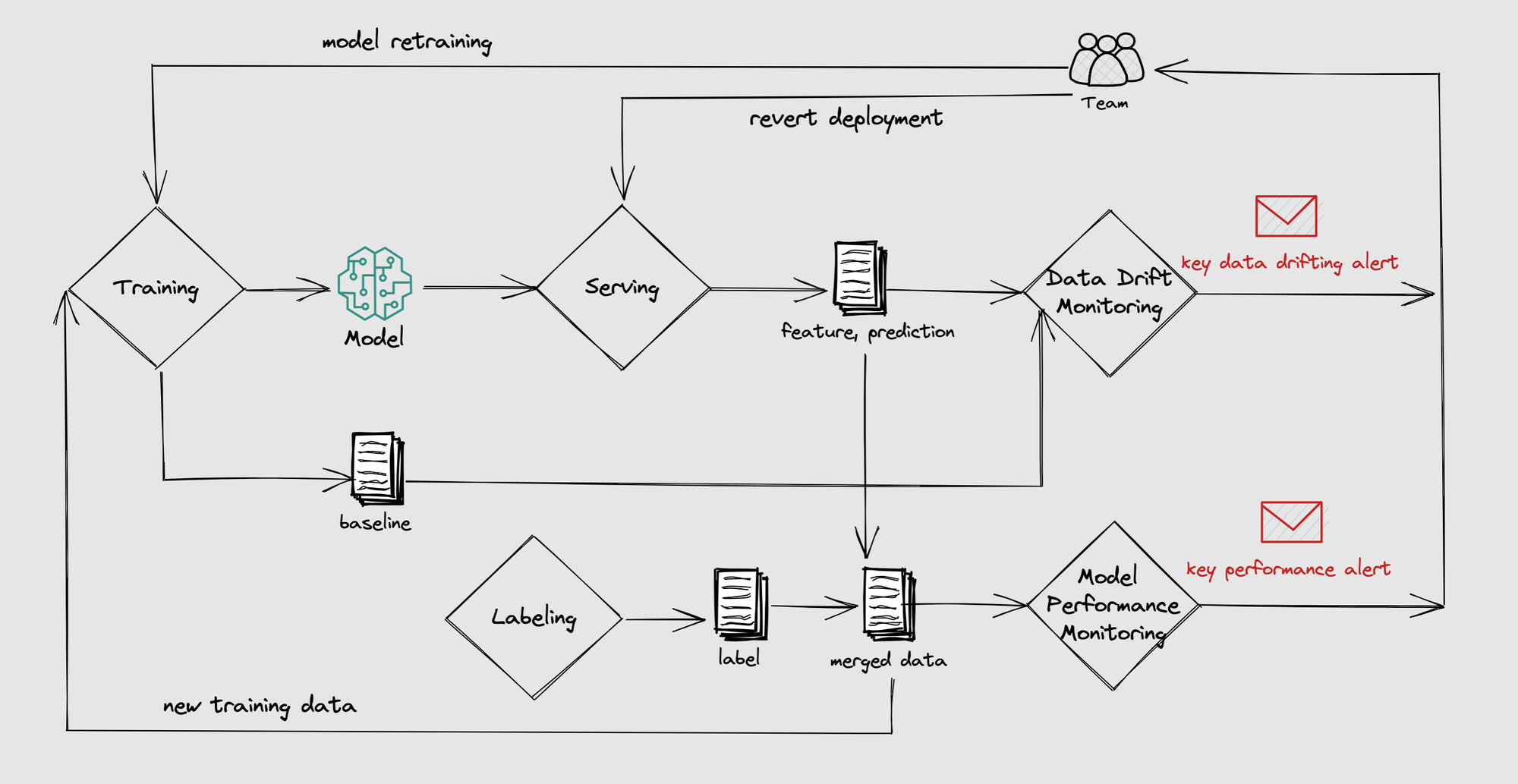

🍱 BentoML `v1.0.11` is here, featuring a new [inference data collection and model monitoring API](https://docs.bentoml.org/en/latest/guides/monitoring.html) that integrates easily with any model monitoring framework.

- Introduced the `bentoml.monitor` API for monitoring features, predictions, and target data of numerical, categorical, and numerical sequence types.

```python
import numpy as np

import bentoml
from bentoml.io import NumpyNdarray, Text

CLASS_NAMES = ["setosa", "versicolor", "virginica"]

iris_clf_runner = bentoml.sklearn.get("iris_clf:latest").to_runner()

svc = bentoml.Service("iris_classifier", runners=[iris_clf_runner])


@svc.api(
    input=NumpyNdarray.from_sample(np.array([4.9, 3.0, 1.4, 0.2], dtype=np.double)),
    output=Text(),
)
async def classify(features: np.ndarray) -> str:
    # Values logged inside this block are grouped together for this request.
    with bentoml.monitor("iris_classifier_prediction") as mon:
        # Log each input value as a numerical feature.
        mon.log(features[0], name="sepal length", role="feature", data_type="numerical")
        mon.log(features[1], name="sepal width", role="feature", data_type="numerical")
        mon.log(features[2], name="petal length", role="feature", data_type="numerical")
        mon.log(features[3], name="petal width", role="feature", data_type="numerical")

        results = await iris_clf_runner.predict.async_run([features])
        result = results[0]
        category = CLASS_NAMES[result]

        # Log the predicted class name as a categorical prediction.
        mon.log(category, name="pred", role="prediction", data_type="categorical")
    return category
```
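To make the data model behind `bentoml.monitor` more concrete, here is a stdlib-only sketch of a stand-in collector. It is not BentoML's implementation: the `ToyMonitor` class, `toy_monitor` helper, record layout, and the `"numerical_sequence"` spelling are all hypothetical. It mirrors the pattern above, where each `log` call inside one `with` block contributes a named field to a single record.

```python
from __future__ import annotations

from contextlib import contextmanager
from typing import Any, Iterator

# Roles and data types as described above; "numerical_sequence" is an
# assumed spelling for sequence data, not taken from BentoML.
ROLES = {"feature", "prediction", "target"}
DATA_TYPES = {"numerical", "categorical", "numerical_sequence"}


class ToyMonitor:
    """Hypothetical stand-in that collects the fields of one record."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.fields: dict[str, dict[str, Any]] = {}

    def log(self, data: Any, name: str, role: str, data_type: str) -> None:
        if role not in ROLES:
            raise ValueError(f"unknown role: {role!r}")
        if data_type not in DATA_TYPES:
            raise ValueError(f"unknown data_type: {data_type!r}")
        self.fields[name] = {"value": data, "role": role, "data_type": data_type}


# Completed records, standing in for a log file or an exporter.
RECORDS: list[dict[str, Any]] = []


@contextmanager
def toy_monitor(name: str) -> Iterator[ToyMonitor]:
    mon = ToyMonitor(name)
    yield mon
    # Only reached when the block exits normally: flush the whole record at once.
    RECORDS.append({"monitor": name, **{k: v["value"] for k, v in mon.fields.items()}})


with toy_monitor("iris_classifier_prediction") as mon:
    mon.log(5.1, name="sepal length", role="feature", data_type="numerical")
    mon.log("setosa", name="pred", role="prediction", data_type="categorical")

print(RECORDS[0])
```

Grouping fields per block is what lets a downstream monitoring tool join the features and the prediction of the same request without extra bookkeeping.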

- Enabled monitoring data collection through log file forwarding with any log forwarder (Fluent Bit, Filebeat, Logstash) or through an OTLP exporter.

- Configuration for monitoring data collection through log files.

```yaml
monitoring:
  enabled: true
  type: default
  options:
    log_path: path/to/log/file
```
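Forwarders such as Fluent Bit, Filebeat, or Logstash work by tailing the monitoring log file and shipping new entries. The sketch below shows only that tailing step, using a hypothetical `read_new_records` helper. It assumes one JSON object per line, which is an assumption for illustration; check the monitoring guide for the actual on-disk format. Real forwarders also handle file rotation, retries, and persistent offsets.

```python
from __future__ import annotations

import json
import tempfile
from pathlib import Path
from typing import Any


def read_new_records(path: Path, offset: int) -> tuple[list[dict[str, Any]], int]:
    """Return the records appended to `path` after byte `offset`, plus the new offset.

    Hypothetical format: one JSON object per newline-terminated line. A trailing
    line without a newline is treated as still being written and is left for
    the next poll, so half-written entries are never shipped.
    """
    records: list[dict[str, Any]] = []
    with path.open("rb") as f:
        f.seek(offset)
        for raw in f:
            if not raw.endswith(b"\n"):
                break  # incomplete line: wait for the writer to finish it
            records.append(json.loads(raw))
            offset += len(raw)
    return records, offset


# Demo: two complete records followed by one half-written record.
with tempfile.TemporaryDirectory() as tmp:
    log = Path(tmp) / "data.log"
    log.write_bytes(b'{"pred": "setosa"}\n{"pred": "virginica"}\n{"pred": "vers')
    batch, pos = read_new_records(log, 0)
    print(batch, pos)
```

Keeping the byte offset outside the function lets a caller poll repeatedly and pick up exactly where the previous read stopped.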

- Configuration for monitoring data collection through an OTLP exporter.

```yaml
monitoring:
  enabled: true
  type: otlp
  options:
    endpoint: http://localhost:5000
    insecure: true
    credentials: null
    headers: null
    timeout: 10
    compression: null
    meta_sample_rate: 1.0
```
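Both configurations share the same top-level shape: an `enabled` flag, a `type`, and a type-specific `options` mapping. As a sketch, a hypothetical `check_monitoring_config` helper (not part of BentoML) can catch common mistakes such as a misspelled key before a service starts. The YAML could be parsed with a library such as PyYAML; here the mappings are written as Python dicts to keep the example dependency-free.

```python
from __future__ import annotations

from typing import Any

# Top-level keys shown in the log-file configuration above.
# This checker is a hypothetical helper, not part of BentoML.
KNOWN_KEYS = {"enabled", "type", "options"}


def check_monitoring_config(cfg: dict[str, Any]) -> list[str]:
    """Return a list of problems found in a `monitoring:` mapping (empty if none)."""
    problems: list[str] = []
    for key in sorted(cfg.keys() - KNOWN_KEYS):
        problems.append(f"unknown key {key!r}")
    if not isinstance(cfg.get("enabled"), bool):
        problems.append("'enabled' should be a boolean")
    if not isinstance(cfg.get("type"), str):
        problems.append("'type' should be a string such as 'default' or 'otlp'")
    if not isinstance(cfg.get("options", {}), dict):
        problems.append("'options' should be a mapping")
    return problems


ok = {"enabled": True, "type": "default", "options": {"log_path": "path/to/log/file"}}
bad = {"enable": True, "type": "otlp", "options": {"endpoint": "http://localhost:5000"}}
print(check_monitoring_config(ok))
print(check_monitoring_config(bad))
```

The `type` check is deliberately loose, since third-party plugins may register collector types beyond `default` and `otlp`.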

- Supported third-party monitoring data collector integrations through BentoML plugins. See the [bentoml/plugins](https://github.com/bentoml/plugins) repository for more details.

🐳 Improved containerization SDK and CLI options; read more in [#3164](https://github.com/bentoml/BentoML/pull/3164).

- Added support for multiple backend builders (Docker, nerdctl, Podman, Buildah, Buildx) in addition to buildctl (the standalone BuildKit builder).

- Improved the Python SDK for containerization with the different backend builder options.

```python
import bentoml

bentoml.container.build(
    "iris_classifier:latest",
    backend="podman",
    features=["grpc", "grpc-reflection"],
    # Additional backend-specific build options can be passed as keyword arguments.
)
```
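With several builders supported, a deployment script may want to use whichever one is installed on the machine. The sketch below is a hypothetical helper, `pick_backend`, that returns the first preferred backend whose executable is on `PATH`. The lowercase backend strings follow the `backend="podman"` example above, and the backend-to-binary table is an assumption (Buildx, for instance, is invoked through `docker`).

```python
from __future__ import annotations

import shutil
from typing import Callable, Iterable, Optional

# Assumed mapping from backend name to the executable that must be installed.
BACKEND_BINARIES = {
    "docker": "docker",
    "nerdctl": "nerdctl",
    "podman": "podman",
    "buildah": "buildah",
    "buildx": "docker",  # Buildx runs as a Docker CLI plugin
    "buildctl": "buildctl",
}


def pick_backend(
    preferred: Iterable[str],
    which: Callable[[str], Optional[str]] = shutil.which,
) -> str | None:
    """Return the first preferred backend whose binary is found, else None."""
    for backend in preferred:
        binary = BACKEND_BINARIES.get(backend)
        if binary is not None and which(binary):
            return backend
    return None
```

The result could then be passed as `backend=` to `bentoml.container.build`, with a clear error when it is `None`. Injecting `which` keeps the helper testable without installing any builder.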

- Improved the CLI to include the newly added options.

```bash
bentoml containerize --help
```

- Standardized the generated Dockerfile in bentos to be compatible with all build tools for use cases that require building from a Dockerfile directly.
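Since the generated Dockerfile no longer relies on BuildKit-specific syntax, a bento can in principle be built with a plain `build` command from any Docker-compatible CLI. The sketch below assembles such a command. The Dockerfile location (`env/docker/Dockerfile` inside the bento directory), the use of the bento directory as build context, and the helper names `dockerfile_build_cmd` and `build_from_dockerfile` are assumptions for illustration.

```python
from __future__ import annotations

import subprocess
from pathlib import Path

# Assumption: the generated Dockerfile lives at env/docker/Dockerfile inside the
# bento directory and expects the bento directory as the build context.
DOCKERFILE_RELPATH = Path("env") / "docker" / "Dockerfile"

# CLIs that accept Docker-style `build -f FILE -t TAG CONTEXT` arguments.
DOCKER_COMPATIBLE = {"docker", "podman", "nerdctl"}


def dockerfile_build_cmd(bento_dir: str | Path, tag: str, tool: str = "docker") -> list[str]:
    """Build the argument list for a Docker-style build of a bento directory."""
    if tool not in DOCKER_COMPATIBLE:
        raise ValueError(f"{tool!r} does not take docker-style build arguments")
    bento_dir = Path(bento_dir)
    return [tool, "build", "-f", str(bento_dir / DOCKERFILE_RELPATH), "-t", tag, str(bento_dir)]


def build_from_dockerfile(bento_dir: str | Path, tag: str, tool: str = "docker") -> None:
    # Runs the build; raises subprocess.CalledProcessError if it fails.
    subprocess.run(dockerfile_build_cmd(bento_dir, tag, tool), check=True)
```

Separating command construction from execution makes it easy to print or log the exact command before running it.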

💡 We continue to update the documentation and examples on every release to help the community unlock the full power of BentoML.

- Learn more about [inference data collection and model monitoring](https://docs.bentoml.org/en/latest/guides/monitoring.html) capabilities in BentoML.

- Learn more about the [default metrics](https://docs.bentoml.org/en/latest/guides/metrics.html#default-metrics) that come out of the box and how to add [custom metrics](https://docs.bentoml.org/en/latest/guides/metrics.html#custom-metrics) in BentoML.

What's Changed

* chore: add framework utils functions directory by larme in https://github.com/bentoml/BentoML/pull/3203

* fix: missing f-string in tag validation error message by csh3695 in https://github.com/bentoml/BentoML/pull/3205

* chore(build_config): bypass exception when cuda and conda is specified by aarnphm in https://github.com/bentoml/BentoML/pull/3188

* docs: Update asynchronous API documentation by ssheng in https://github.com/bentoml/BentoML/pull/3204

* style: use relative import inside _internal/ by larme in https://github.com/bentoml/BentoML/pull/3209

* style: fix `monitoring` type error by aarnphm in https://github.com/bentoml/BentoML/pull/3208

* chore(build): add dependabot for pyproject.toml by aarnphm in https://github.com/bentoml/BentoML/pull/3139

* chore(deps): bump black[jupyter] from 22.8.0 to 22.10.0 in /requirements by dependabot in https://github.com/bentoml/BentoML/pull/3217

* chore(deps): bump pylint from 2.15.3 to 2.15.5 in /requirements by dependabot in https://github.com/bentoml/BentoML/pull/3212

* chore(deps): bump pytest-asyncio from 0.19.0 to 0.20.1 in /requirements by dependabot in https://github.com/bentoml/BentoML/pull/3216

* chore(deps): bump imageio from 2.22.1 to 2.22.4 in /requirements by dependabot in https://github.com/bentoml/BentoML/pull/3211

* fix: don't index ContextVar at runtime by sauyon in https://github.com/bentoml/BentoML/pull/3221

* chore(deps): bump pyarrow from 9.0.0 to 10.0.0 in /requirements by dependabot in https://github.com/bentoml/BentoML/pull/3214

* chore: configuration check for development by aarnphm in https://github.com/bentoml/BentoML/pull/3223

* fix bento create by quandollar in https://github.com/bentoml/BentoML/pull/3220

* fix(docs): missing `table` tag by nyongja in https://github.com/bentoml/BentoML/pull/3231

* docs: grammar corrections by tbazin in https://github.com/bentoml/BentoML/pull/3234

* chore(deps): bump pytest-asyncio from 0.20.1 to 0.20.2 in /requirements by dependabot in https://github.com/bentoml/BentoML/pull/3238

* chore(deps): bump pytest-xdist[psutil] from 2.5.0 to 3.0.2 by dependabot in https://github.com/bentoml/BentoML/pull/3245

* chore(deps): bump pytest from 7.1.3 to 7.2.0 in /requirements by dependabot in https://github.com/bentoml/BentoML/pull/3237

* chore(deps): bump build[virtualenv] from 0.8.0 to 0.9.0 in /requirements by dependabot in https://github.com/bentoml/BentoML/pull/3240

* deps: bumping gRPC and OTLP dependencies by aarnphm in https://github.com/bentoml/BentoML/pull/3228

* feat(file): support custom mime type for file proto by aarnphm in https://github.com/bentoml/BentoML/pull/3095

* fix: multipart for client by sauyon in https://github.com/bentoml/BentoML/pull/3253

* fix(json): make sure to parse a list of dict for_sample by aarnphm in https://github.com/bentoml/BentoML/pull/3229

* chore: move test proto to internal tests only by aarnphm in https://github.com/bentoml/BentoML/pull/3255

* fix(framework): external_modules for loading pytorch by bojiang in https://github.com/bentoml/BentoML/pull/3254

* feat(container): builder implementation by aarnphm in https://github.com/bentoml/BentoML/pull/3164

* feat(sdk): implement otlp monitoring exporter by bojiang in https://github.com/bentoml/BentoML/pull/3257

* chore(grpc): add missing __init__.py by aarnphm in https://github.com/bentoml/BentoML/pull/3259

* docs(metrics): Update docs for the default metrics by ssheng in https://github.com/bentoml/BentoML/pull/3262

* chore: generate plain dockerfile without buildkit syntax by aarnphm in https://github.com/bentoml/BentoML/pull/3261

* style: remove ` type: ignore` by aarnphm in https://github.com/bentoml/BentoML/pull/3265

* fix: lazy load ONNX utils by aarnphm in https://github.com/bentoml/BentoML/pull/3266

* fix(pytorch): pickle is the unpickler of cloudpickle by bojiang in https://github.com/bentoml/BentoML/pull/3269

* fix: instructions for missing sklearn dependency by benjamintanweihao in https://github.com/bentoml/BentoML/pull/3271

* docs: ONNX signature docs by larme in https://github.com/bentoml/BentoML/pull/3272

* chore(deps): bump pyarrow from 10.0.0 to 10.0.1 by dependabot in https://github.com/bentoml/BentoML/pull/3273

* chore(deps): bump pylint from 2.15.5 to 2.15.6 by dependabot in https://github.com/bentoml/BentoML/pull/3274

* fix(pandas): only set columns when `apply_column_names` is set by mqk in https://github.com/bentoml/BentoML/pull/3275

* feat: configuration versioning by aarnphm in https://github.com/bentoml/BentoML/pull/3052

* fix(container): support comma in docker env by larme in https://github.com/bentoml/BentoML/pull/3285

* chore(stub): `import filetype` by aarnphm in https://github.com/bentoml/BentoML/pull/3260

* fix(container): ensure to stream logs when `DOCKER_BUILDKIT=0` by aarnphm in https://github.com/bentoml/BentoML/pull/3294

* docs: update instructions for containerize message by aarnphm in https://github.com/bentoml/BentoML/pull/3289

* fix: unset `NVIDIA_VISIBLE_DEVICES` when cuda image is used by aarnphm in https://github.com/bentoml/BentoML/pull/3298

* fix: multipart logic by sauyon in https://github.com/bentoml/BentoML/pull/3297

* chore(deps): bump pylint from 2.15.6 to 2.15.7 by dependabot in https://github.com/bentoml/BentoML/pull/3291

* docs: wrong arguments when saving by KimSoungRyoul in https://github.com/bentoml/BentoML/pull/3306

* chore(deps): bump pylint from 2.15.7 to 2.15.8 in /requirements by dependabot in https://github.com/bentoml/BentoML/pull/3308

* chore(deps): bump pytest-xdist[psutil] from 3.0.2 to 3.1.0 in /requirements by dependabot in https://github.com/bentoml/BentoML/pull/3309

* chore(pyproject): bumping python version typeshed to 3.11 by aarnphm in https://github.com/bentoml/BentoML/pull/3281

* fix(monitor): disable validate for Formatter by bojiang in https://github.com/bentoml/BentoML/pull/3317

* doc(monitoring): monitoring guide by bojiang in https://github.com/bentoml/BentoML/pull/3300

* feat: parsing path for env by aarnphm in https://github.com/bentoml/BentoML/pull/3314

* fix: remove assertion for dtype by aarnphm in https://github.com/bentoml/BentoML/pull/3320

* feat: client lazy load by aarnphm in https://github.com/bentoml/BentoML/pull/3323

* chore: provides shim for bentoctl by aarnphm in https://github.com/bentoml/BentoML/pull/3322

New Contributors

* csh3695 made their first contribution in https://github.com/bentoml/BentoML/pull/3205

* nyongja made their first contribution in https://github.com/bentoml/BentoML/pull/3231

* tbazin made their first contribution in https://github.com/bentoml/BentoML/pull/3234

* KimSoungRyoul made their first contribution in https://github.com/bentoml/BentoML/pull/3306

**Full Changelog**: https://github.com/bentoml/BentoML/compare/v1.0.10...v1.0.11