Data integration

New sources

- [Facebook Ads](https://github.com/mage-ai/mage-ai/blob/master/mage_integrations/mage_integrations/sources/facebook_ads/README.md)

- [HubSpot](https://github.com/mage-ai/mage-ai/blob/master/mage_integrations/mage_integrations/sources/hubspot/README.md)

- [Postmark](https://github.com/mage-ai/mage-ai/blob/master/mage_integrations/mage_integrations/sources/postmark/README.md)

Improvements on existing sources and destinations

- S3 source

  - Automatically add a `_s3_last_modified` column from the `LastModified` key, and enable the `_s3_last_modified` column as a bookmark property.

  - Allow filtering objects using regex syntax by configuring the `search_pattern` key.

  - Support multiple streams by configuring a list of table configs in the `table_configs` key. See the README: [https://github.com/mage-ai/mage-ai/blob/master/mage_integrations/mage_integrations/sources/amazon_s3/README.md](https://github.com/mage-ai/mage-ai/blob/master/mage_integrations/mage_integrations/sources/amazon_s3/README.md)

- Postgres source log based replication

  - Automatically add a `_mage_deleted_at` column to record the source row deletion time.

  - When the operation is an update and the unique conflict method is ignore, create a new record in the destination.

- In the source or destination YAML config, interpolate secret values from AWS Secrets Manager using the syntax `{{ aws_secret_var('some_name_for_secret') }}`. Here is the full guide: [https://docs.mage.ai/production/configuring-production-settings/secrets#yaml](https://docs.mage.ai/production/configuring-production-settings/secrets#yaml)
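To make the S3 source items above more concrete, here is a minimal Python sketch of how regex filtering and a last-modified bookmark could combine. It is not Mage's implementation: the function `select_new_objects`, the sample listing, and the choice of `re.search` for matching are assumptions made for illustration. Only the `Key` and `LastModified` field names (as returned by S3 listings) and the `_s3_last_modified` column name come from the notes.

```python
import re
from datetime import datetime, timezone


def select_new_objects(objects, search_pattern, bookmark=None):
    """Keep objects whose key matches the regex and that changed after the bookmark.

    Hypothetical helper for illustration only; not part of Mage.
    """
    pattern = re.compile(search_pattern)
    selected = []
    for obj in objects:
        # Regex filter, analogous in spirit to the `search_pattern` key.
        if not pattern.search(obj["Key"]):
            continue
        # Bookmark filter: skip objects not modified since the last sync.
        if bookmark is not None and obj["LastModified"] <= bookmark:
            continue
        # Carry LastModified along under the column name from the notes.
        selected.append(
            {"key": obj["Key"], "_s3_last_modified": obj["LastModified"]}
        )
    return selected


# Made-up listing, shaped like entries from an S3 object listing.
objects = [
    {"Key": "users/2023-01-01.csv", "LastModified": datetime(2023, 1, 1, tzinfo=timezone.utc)},
    {"Key": "users/2023-02-01.csv", "LastModified": datetime(2023, 2, 1, tzinfo=timezone.utc)},
    {"Key": "orders/2023-02-01.csv", "LastModified": datetime(2023, 2, 1, tzinfo=timezone.utc)},
]
bookmark = datetime(2023, 1, 15, tzinfo=timezone.utc)

new_objects = select_new_objects(objects, r"^users/.*\.csv$", bookmark)
print([o["key"] for o in new_objects])
```

With the sample data, only the `users/` file modified after the bookmark is selected; the next sync would then use the largest `_s3_last_modified` value seen as its new bookmark.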

Full lists of available sources and destinations can be found here:

- Sources: [https://docs.mage.ai/data-integrations/overview#available-sources](https://docs.mage.ai/data-integrations/overview#available-sources)

- Destinations: [https://docs.mage.ai/data-integrations/overview#available-destinations](https://docs.mage.ai/data-integrations/overview#available-destinations)

Customize pipeline alerts

Use the `alert_on` config to send alerts only for the events you choose: when a pipeline run fails, succeeds, or passes its SLA.

```yaml
notification_config:
  alert_on:
    - trigger_failure
    - trigger_passed_sla
    - trigger_success
```
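As a rough mental model of the `alert_on` setting, the following Python sketch gates notifications on the configured event names. The function `should_alert` and the dictionary layout are hypothetical, and treating a missing `alert_on` list as "send nothing" is an assumption of this sketch, not documented Mage behavior.

```python
def should_alert(event, notification_config):
    """Return True if the event is listed under alert_on.

    Hypothetical helper; a missing alert_on list is treated as empty here,
    which is an assumption of this sketch.
    """
    alert_on = notification_config.get("alert_on") or []
    return event in alert_on


# Mirrors the YAML structure, with success alerts left out.
notification_config = {
    "alert_on": ["trigger_failure", "trigger_passed_sla"],
}

for event in ("trigger_failure", "trigger_passed_sla", "trigger_success"):
    print(event, should_alert(event, notification_config))
```

In this example a failed run or a missed SLA would notify, while a successful run would stay silent.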

Here are the guides for configuring the alerts:

- Email alerts: [https://docs.mage.ai/production/observability/alerting-email#create-notification-config](https://docs.mage.ai/production/observability/alerting-email#create-notification-config)

- Slack alerts: [https://docs.mage.ai/production/observability/alerting-slack#update-mage-project-settings](https://docs.mage.ai/production/observability/alerting-slack#update-mage-project-settings)

- Teams alerts: [https://docs.mage.ai/production/observability/alerting-teams#update-mage-project-settings](https://docs.mage.ai/production/observability/alerting-teams#update-mage-project-settings)

Deploy Mage on AWS using AWS Cloud Development Kit (CDK)

Besides using Terraform scripts to deploy Mage to the cloud, Mage now also supports managing AWS cloud resources using the [AWS Cloud Development Kit](https://aws.amazon.com/cdk/) in TypeScript.

Follow this [guide](https://github.com/mage-ai/mage-ai-cdk/tree/master/typescript) to deploy the Mage app to AWS using AWS CDK scripts.

Stitch integration

Mage can orchestrate sync jobs in Stitch through an API integration. Check out the [guide](https://docs.mage.ai/integrations/stitch) to learn how to trigger Stitch jobs and poll their statuses.
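The trigger-then-poll pattern mentioned above can be sketched generically in Python. This does not use the Stitch API or Mage's integration code: `poll_until_done`, the `fetch_status` callable, and the status strings `"running"`, `"succeeded"`, and `"failed"` are all hypothetical stand-ins chosen for illustration.

```python
import time


def poll_until_done(fetch_status, poll_interval=1.0, timeout=60.0):
    """Call fetch_status() repeatedly until a terminal status or the timeout.

    fetch_status is any zero-argument callable returning a status string.
    The status names used here are hypothetical.
    """
    deadline = time.monotonic() + timeout
    while True:
        status = fetch_status()
        if status in ("succeeded", "failed"):
            return status
        if time.monotonic() >= deadline:
            raise TimeoutError("sync job did not finish before the timeout")
        time.sleep(poll_interval)


# Fake status source standing in for a real "get job status" API call.
statuses = iter(["running", "running", "succeeded"])
result = poll_until_done(lambda: next(statuses), poll_interval=0, timeout=5)
print(result)
```

Passing the status lookup in as a callable keeps the loop independent of any particular API client, so the same helper can be exercised with a fake in tests.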

Bug fixes & polish

- Allow pressing the `escape` key to close the error message popup instead of having to click the `x` button in the top right corner.

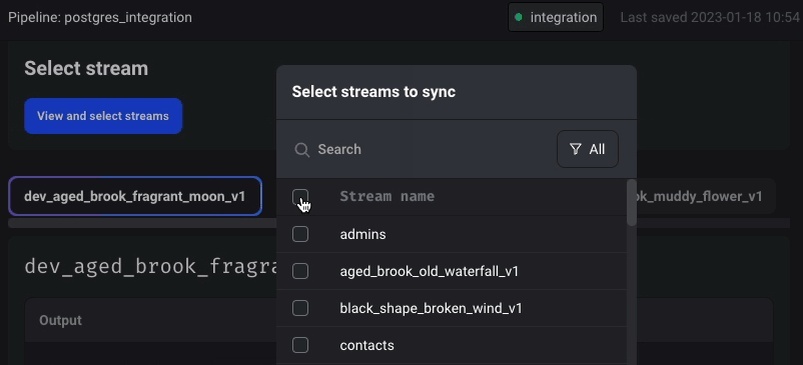

- If a data integration source has multiple streams, select all streams with one click instead of selecting each stream individually.

- Make pipeline runs pages (both the overall `/pipeline-runs` and the trigger `/pipelines/[trigger]/runs` pages) more efficient by avoiding individual requests for pipeline schedules (i.e. triggers).

- To avoid confusion when using the drag and drop feature to add dependencies between blocks in the dependency tree, the ports (white circles on blocks) on other blocks now disappear while the drag feature is active. A dependency line must be dragged from one block’s port onto another block itself, not onto that block’s port, which is what some users were doing previously.

- Fix positioning of newly added blocks. Previously, a new block added with a custom block name was placed at the bottom of the pipeline. New blocks now appear immediately after the block where they were added.

- Popup error messages now include both the stack trace and the traceback to help with debugging (previously the traceback was not included).

- Update links to docs in code block comments (links were broken due to recent docs migration to a different platform).

View the full [Changelog](https://www.notion.so/mageai/What-s-new-7cc355e38e9c42839d23fdbef2dabd2c)