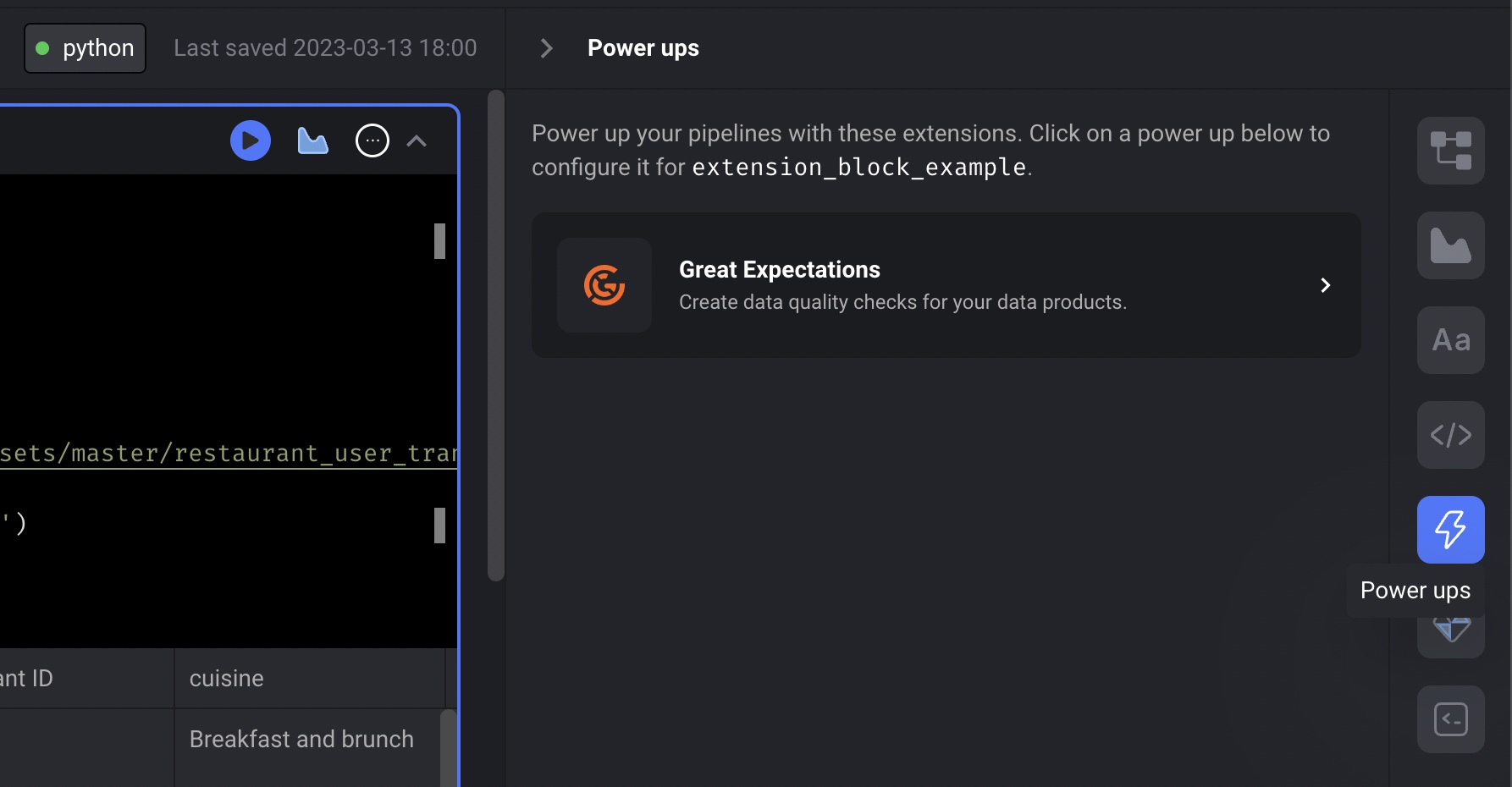

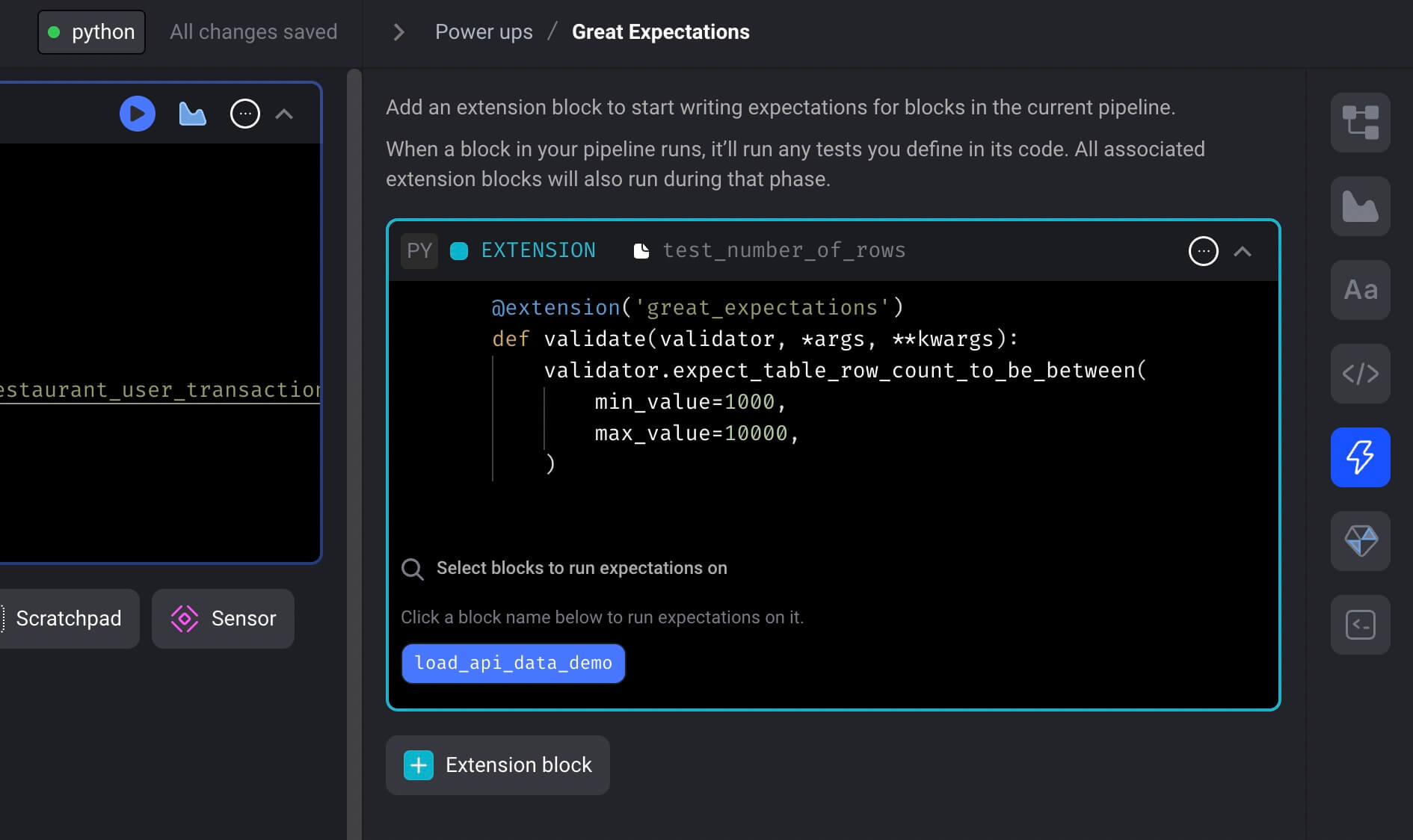

Great Expectations integration

Mage is now integrated with [Great Expectations](https://greatexpectations.io/) to test the data produced by pipeline blocks.

You can use any of the available [expectations](https://greatexpectations.io/expectations/) in your Mage pipeline to check your data quality.

Follow the [doc](https://docs.mage.ai/development/testing/great-expectations) to add expectations to your pipeline and run tests on your block output.
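To give a feel for the kind of check an expectation performs on block output, here is a minimal plain-Python sketch. It does not use the Great Expectations library or Mage's integration; the function `check_not_null` and the shape of its result are hypothetical, loosely modeled on a not-null expectation over a column.

```python
from typing import Any, Dict, List


def check_not_null(rows: List[Dict[str, Any]], column: str) -> Dict[str, Any]:
    """Hypothetical stand-in for a not-null expectation on one column."""
    # Collect the indexes of rows where the column is missing or None.
    failed = [i for i, row in enumerate(rows) if row.get(column) is None]
    return {
        'success': not failed,
        'unexpected_count': len(failed),
        'unexpected_indexes': failed,
    }


# Example block output: the second row has no user_id.
block_output = [
    {'user_id': 1, 'plan': 'free'},
    {'user_id': None, 'plan': 'pro'},
]
result = check_not_null(block_output, 'user_id')
print(result['success'], result['unexpected_count'])
```

In a real pipeline the linked doc is the reference for how expectations are attached to a block; this sketch only shows the pass/fail idea behind a single check.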

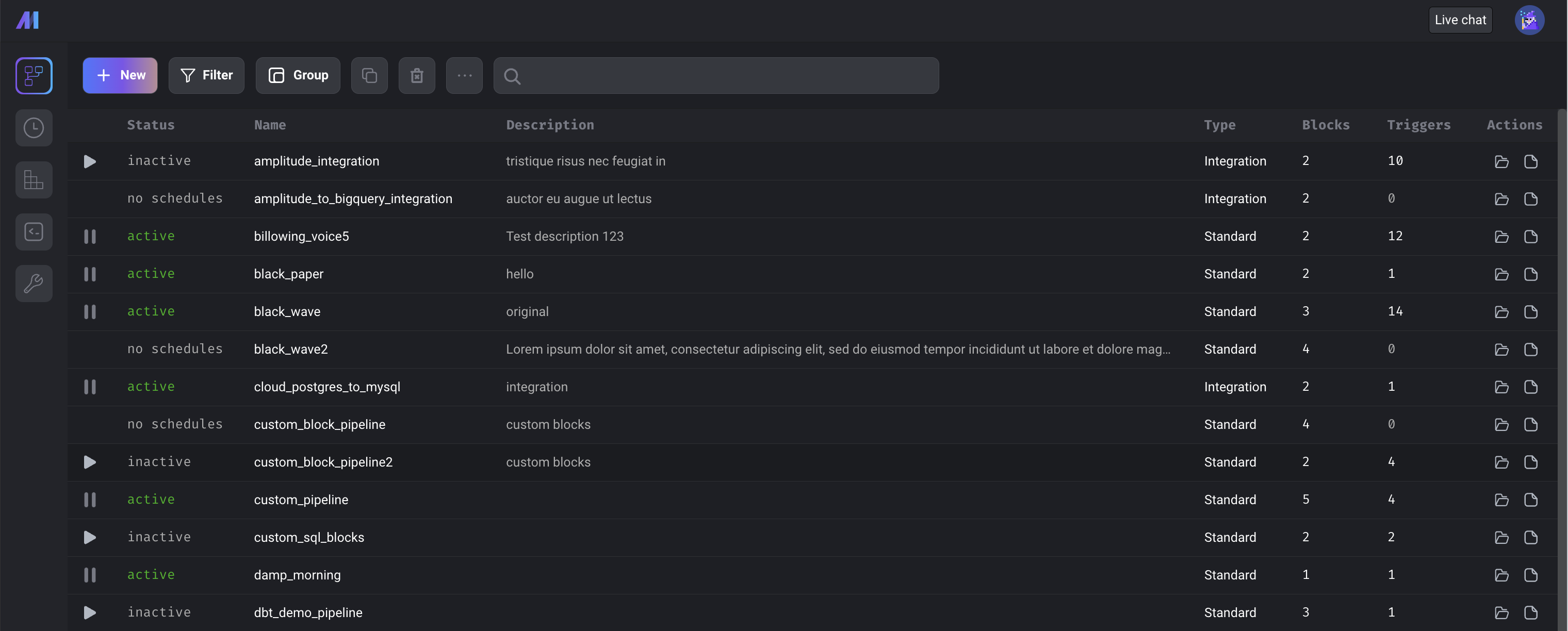

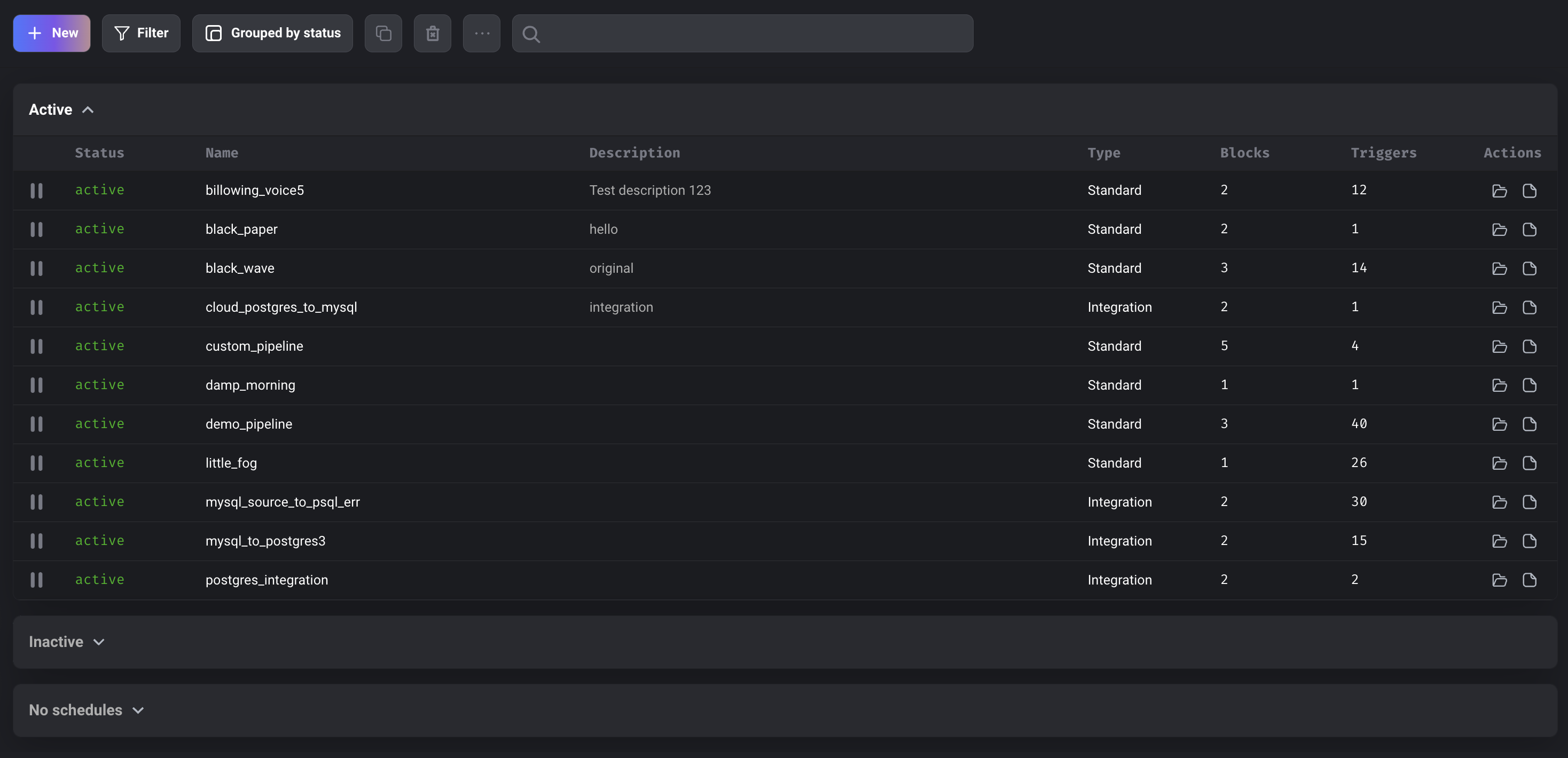

Pipeline dashboard updates

- Added pipeline description.

- Single click on a row no longer opens a pipeline. In order to open a pipeline now, users can double-click a row, click on the pipeline name, or click on the open folder icon at the end of the row.

- Select a pipeline row to perform an action (e.g. clone, delete, rename, or edit description).

- **Clone pipeline** (icon with 2 overlapping squares) - Cloning the selected pipeline will create a new pipeline with the same configuration and code blocks. The blocks use the same block files as the original pipeline. Pipeline triggers, runs, backfills, and logs are not copied over to the new pipeline.

- **Delete pipeline** (trash icon) - Deletes the selected pipeline.

- **Rename pipeline** (item in dropdown menu under ellipsis icon) - Renames the selected pipeline.

- **Edit description** (item in dropdown menu under ellipsis icon) - Edits pipeline description. Users can hover over the description in the table to view more of it.

- Users can click on the file icon under the `Actions` column to go directly to the pipeline's logs.

- Added a search bar that searches for text in the pipeline `uuid`, `name`, and `description` and filters the list to the pipelines that match.

- The create, update, and delete actions are not accessible by Viewer roles.

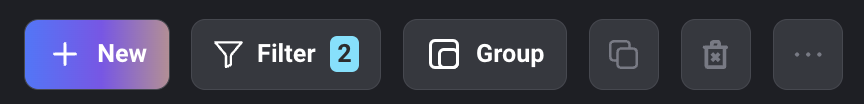

- Added a badge on the Filter button indicating the number of filters applied.

- Group pipelines by `status` or `type`.

SQL block improvements

Toggle SQL block to not create table

Users can write raw SQL blocks that include only the `INSERT` statement. A `CREATE TABLE` statement is no longer required.

Support writing SELECT statements in SQL using raw SQL

Users can write `SELECT` statements using raw SQL in SQL blocks now.

See this [doc](https://docs.mage.ai/guides/sql-blocks#required-sql-statements) for all SQL statements supported in raw SQL.

Support for SSH tunnel in multiple blocks

When using an [SSH tunnel](https://docs.mage.ai/integrations/databases/PostgreSQL#ssh-tunneling) to connect to a Postgres database, the tunnel was originally supported in only one block run at a time because of a local port conflict. Mage now supports SSH tunneling in multiple blocks by finding an unused port to use as the local port. This feature is also supported in Python blocks when using the `mage_ai.io.postgres` module.
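One common way to find an unused local port is to bind a socket to port 0 and let the operating system pick one. The sketch below shows that technique with the standard library only; it is an illustration, not Mage's actual implementation, and `find_unused_local_port` is a hypothetical helper name.

```python
import socket


def find_unused_local_port(host: str = '127.0.0.1') -> int:
    """Ask the OS for a currently free TCP port on the given host."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        # Binding to port 0 lets the OS choose an unused ephemeral port.
        sock.bind((host, 0))
        return sock.getsockname()[1]


# Each tunnel would request its own local port instead of sharing a fixed one.
port_a = find_unused_local_port()
port_b = find_unused_local_port()
print(port_a, port_b)
```

Note that the port is released when the probing socket closes, so another process could claim it before the tunnel binds to it. Code that relies on this approach usually retries on a bind failure.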

Data integration pipeline

New source: Pipedrive

Shout out to [Luis Salomão](https://github.com/Luishfs) for his continued contributions to Mage. The new source [Pipedrive](https://github.com/mage-ai/mage-ai/tree/master/mage_integrations/mage_integrations/sources/pipedrive) is now available in Mage.

Fix BigQuery “query too large” error

Added a check on the size of the query, since it can exceed BigQuery's limit.
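A size check of this kind could look roughly like the sketch below, which splits rows into several `INSERT` statements so that each one stays under a byte limit. This is an assumption about the general approach, not the code Mage ships: `batch_insert_statements` is a hypothetical helper, and `MAX_QUERY_BYTES` is a placeholder value, so consult the BigQuery quota documentation for the real limits.

```python
from typing import Iterator, List

MAX_QUERY_BYTES = 1024 * 1024  # Assumed limit, for illustration only.


def batch_insert_statements(
    table: str,
    value_rows: List[str],
    max_bytes: int = MAX_QUERY_BYTES,
) -> Iterator[str]:
    """Yield INSERT statements whose UTF-8 size stays within max_bytes.

    A single row that is larger than max_bytes is still emitted on its own.
    """
    prefix = f'INSERT INTO {table} VALUES '
    prefix_size = len(prefix.encode('utf-8'))
    batch: List[str] = []
    size = prefix_size
    for row in value_rows:
        # Every row after the first also costs a ", " separator.
        row_size = len(row.encode('utf-8')) + (2 if batch else 0)
        if batch and size + row_size > max_bytes:
            # Adding this row would exceed the limit: flush the current batch.
            yield prefix + ', '.join(batch)
            batch = []
            size = prefix_size
            row_size = len(row.encode('utf-8'))
        batch.append(row)
        size += row_size
    if batch:
        yield prefix + ', '.join(batch)


# Small limit so the batching is visible with only five rows.
rows = [f"({i}, 'name_{i}')" for i in range(5)]
statements = list(batch_insert_statements('dataset.users', rows, max_bytes=70))
for statement in statements:
    print(len(statement.encode('utf-8')), statement)
```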

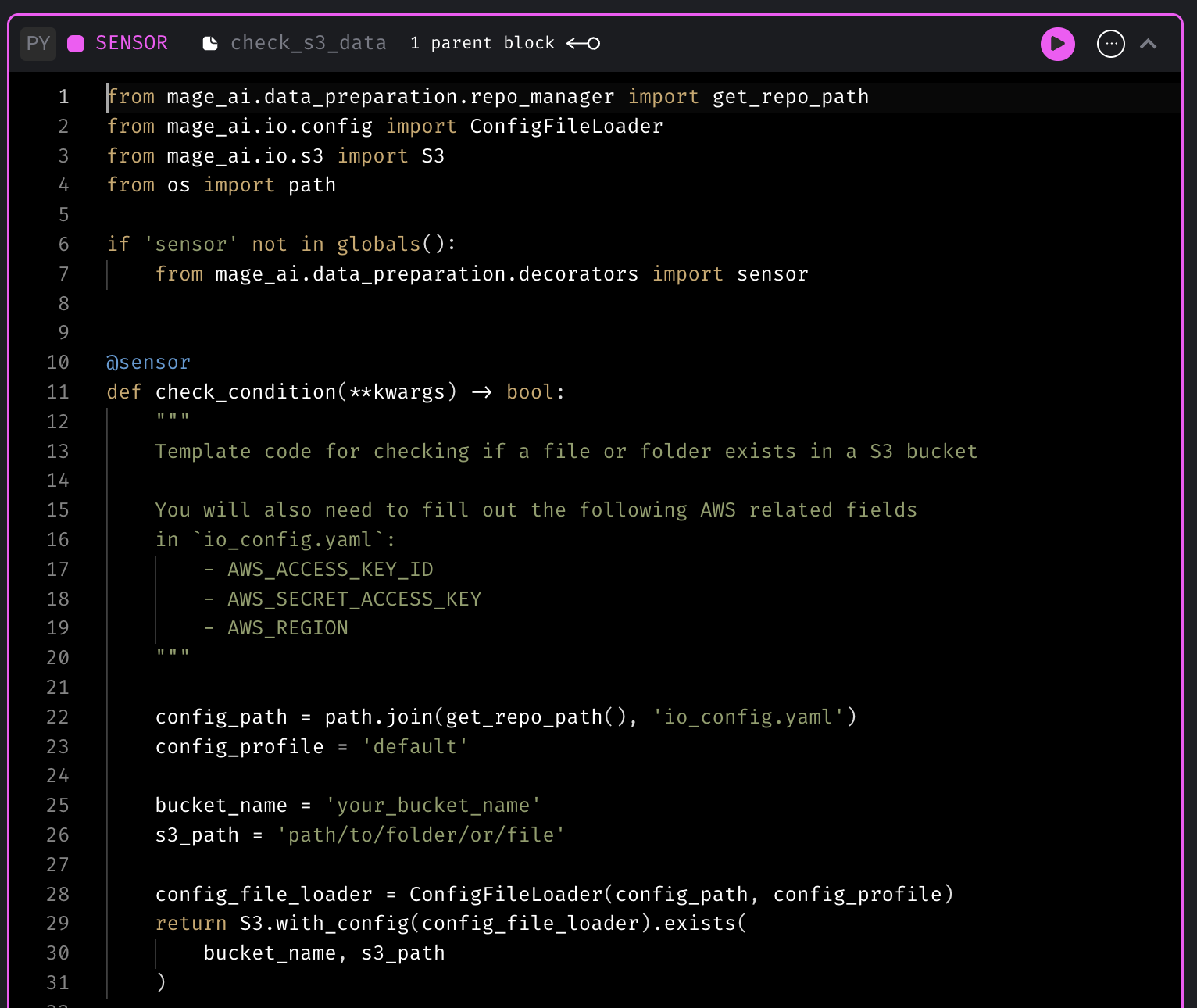

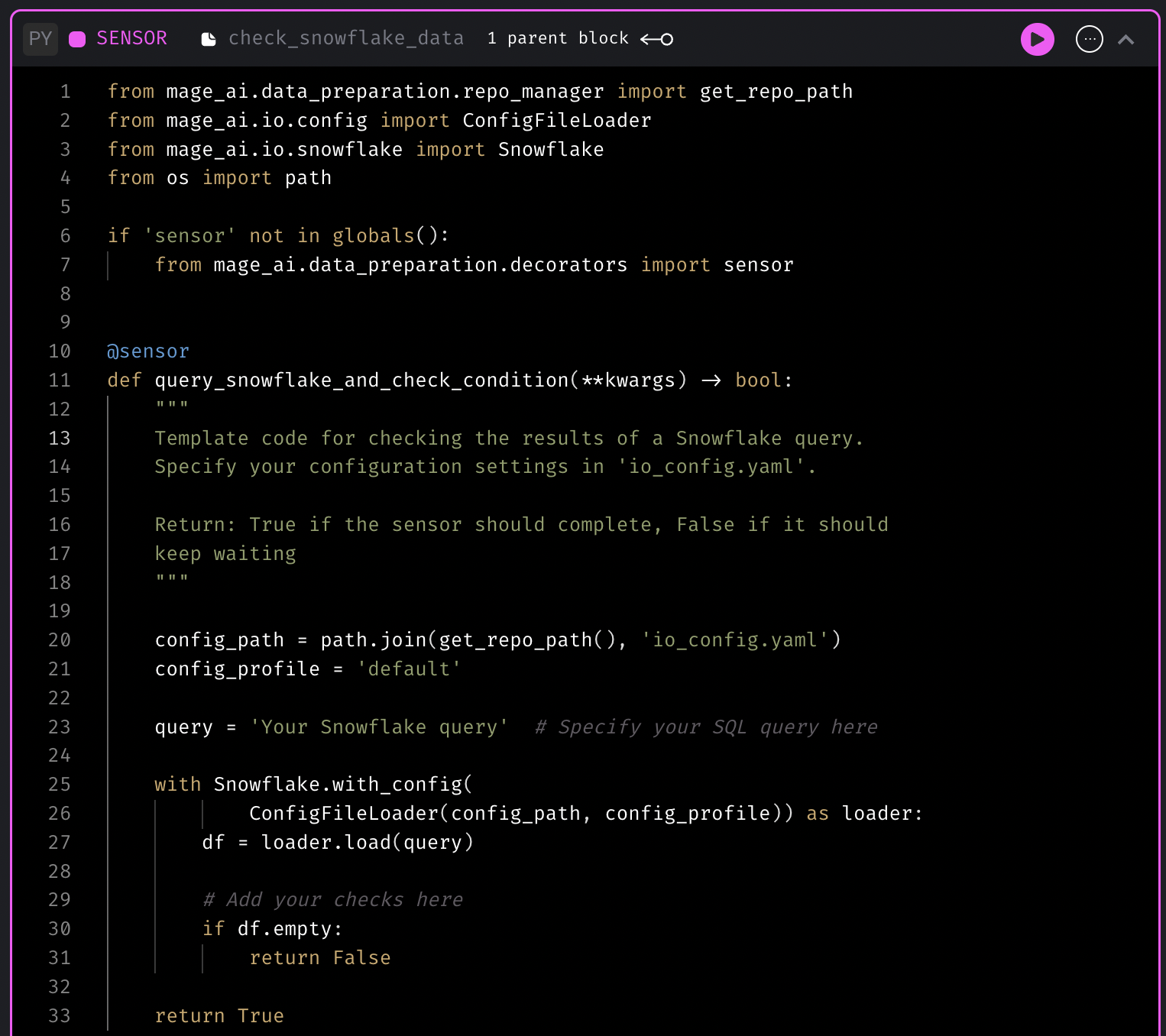

New sensor templates

A [Sensor](https://docs.mage.ai/design/blocks/sensor) block continuously evaluates a condition until it’s met. Mage now has more sensor templates to check whether data has landed in an S3 bucket or in SQL data warehouses.

Sensor template for checking if a file exists in S3

Sensor template for checking the data in SQL data warehouse
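The "evaluate a condition until it's met" behavior can be pictured as a polling loop. The sketch below is a generic, standard-library illustration rather than one of Mage's templates: `wait_until` and `data_has_landed` are hypothetical names, and the condition is a stand-in for a real check such as "the S3 object exists" or "the warehouse query returned rows".

```python
import time
from typing import Callable


def wait_until(
    condition: Callable[[], bool],
    poll_interval: float = 1.0,
    timeout: float = 60.0,
) -> bool:
    """Re-evaluate condition until it returns True or the timeout elapses."""
    deadline = time.monotonic() + timeout
    while True:
        if condition():
            return True
        if time.monotonic() >= deadline:
            return False
        time.sleep(poll_interval)


# Hypothetical condition that becomes true on the third check.
checks = {'count': 0}


def data_has_landed() -> bool:
    checks['count'] += 1
    return checks['count'] >= 3


landed = wait_until(data_has_landed, poll_interval=0.01, timeout=5.0)
print(landed, checks['count'])
```

Downstream blocks would only run once the condition reports success. The timeout keeps a sensor from waiting forever on data that never arrives.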

Support for Spark in standalone mode (self-hosted)

Mage can connect to a standalone Spark cluster and run PySpark code on it. Set the environment variable `SPARK_MASTER_HOST` in your Mage container or instance. PySpark code in a standard batch pipeline will then work automagically by executing on the remote Spark cluster.

Follow this [doc](https://docs.mage.ai/integrations/spark-pyspark#standalone-spark-cluster) to set up Mage to connect to a standalone Spark cluster.

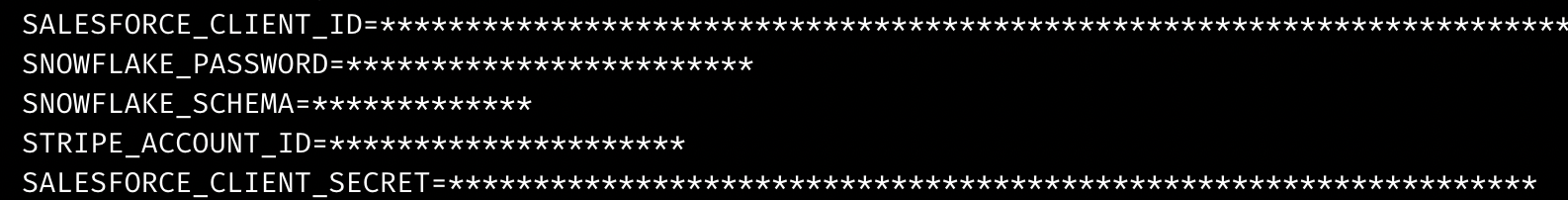

Mask environment variable values with stars in output

Mage now automatically masks environment variable values with stars in terminal output or block output to prevent showing sensitive data in plaintext.
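As a rough illustration of the idea, the sketch below replaces any environment variable value that appears in a piece of output text with stars. It is not Mage's implementation: `mask_env_values` is a hypothetical helper, and both the fixed run of eight stars and the `min_length` cutoff (which avoids masking very short values such as `1`) are assumptions made for this example.

```python
import os
from typing import Mapping, Optional


def mask_env_values(
    text: str,
    env: Optional[Mapping[str, str]] = None,
    min_length: int = 4,
) -> str:
    """Replace every environment variable value found in text with stars."""
    env = os.environ if env is None else env
    # Mask longer values first so a value containing another is fully hidden.
    values = sorted(
        {v for v in env.values() if len(v) >= min_length},
        key=len,
        reverse=True,
    )
    for value in values:
        text = text.replace(value, '*' * 8)
    return text


# A fake environment keeps the example independent of the real one.
fake_env = {'DB_PASSWORD': 's3cr3t-pass', 'MODE': 'dev'}
masked = mask_env_values('connecting with password=s3cr3t-pass', env=fake_env)
print(masked)
```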

Other bug fixes & polish

- Improve streaming pipeline logging

- Show streaming pipeline error logging

- Write logs to multiple files

- Provide the working NGINX config to allow Mage WebSocket traffic.

```nginx
location / {
    proxy_pass http://127.0.0.1:6789;
    proxy_http_version 1.1;
    proxy_set_header Upgrade $http_upgrade;
    proxy_set_header Connection "Upgrade";
    proxy_set_header Host $host;
}
```

- Fix raw SQL quote error.

- Add documentation for developers on adding a new source or sink to streaming pipelines: [https://docs.mage.ai/guides/streaming/contributing](https://docs.mage.ai/guides/streaming/contributing)

View full [Changelog](https://www.notion.so/mageai/What-s-new-7cc355e38e9c42839d23fdbef2dabd2c)